At Sentiance, our mission is to build AI technology that powers our clients' apps to improve people’s lives from a personal, societal, and environmental perspective. Inspired by the latest developments in data science and behavioral science, we are building the next generation of behavior change platforms based on mobile sensing and user context. Transparency, privacy, and ethics are our core values: use cases have to be clear, open, and beneficial for both businesses and users.

Our technology enables businesses to target and reduce the most harmful human behaviors through their mobile apps. Safer driving and a healthier lifestyle (quitting smoking, becoming more active, eating better, drinking less) will save thousands of lives and billions of dollars for society every year. Reducing the use of unsustainable modes of transport, such as cars and planes, also helps our planet.

Companies and institutions all around the world are now investing in technologies that incentivize and coach people towards such positive and sustainable behaviors. Besides their obvious benefits for humankind, the outcomes of those behaviors have clear business value: car insurers prefer safer roads, healthcare companies want their customers to lead balanced and active lives, and cities want citizens to use green modes of transport.

Sentiance technology enables these innovative players to improve and speed up the way they change behaviors. In return for providing low-level data, users are incentivized to change their behavior in ways that benefit themselves, their relatives, society, and the environment.

A Behavior Change Platform

To change behavior, one needs an interface with the user to intervene and track progress. In our digital age, such an interface must meet three criteria: 1) scalability, i.e. the solution can be applied seamlessly to small and large volumes of users; 2) regular communication with users should be cheap and effortless; 3) user integration should be cost-effective.

A mobile app as a behavior change interface meets all three criteria [1]. Such an app gives behavioral scientists an extended armamentarium for implementing digital interventions through features such as metric visualization, feedback text, challenges, or gamification. Digital engagement can be further strengthened through nudging.

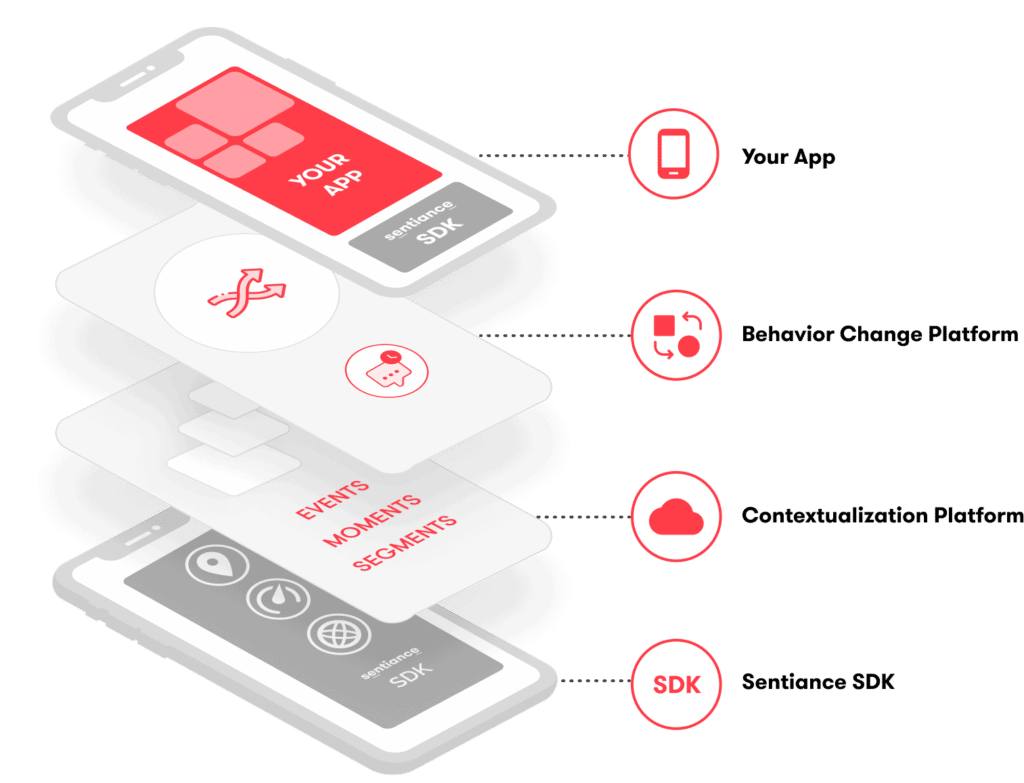

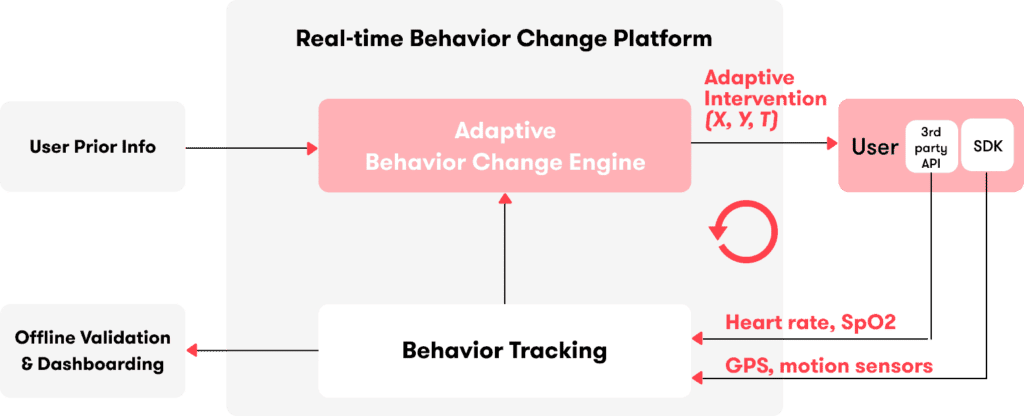

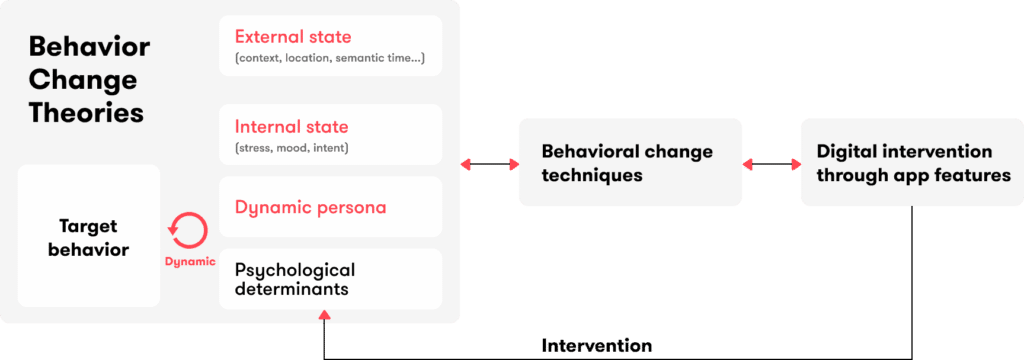

In this blog, we will describe the solution on top of which our clients can build their behavioral products (Fig.1). Our Behavioral Change Platform is based on digital interventions, mobile sensing and the Sentiance contextualization engine. It is designed to maximize change outcomes, independent of the use case or application.

Fig.1: Our behavioral change platform allows clients to build their apps on top.

Our solution is grounded in the latest behavioral science developments and leverages our state-of-the-art AI technology. Its design sought inspiration from the Just-in-Time Adaptive Intervention framework [2], and other recent technological approaches designed to efficiently drive behavior change [3, 4, 5].

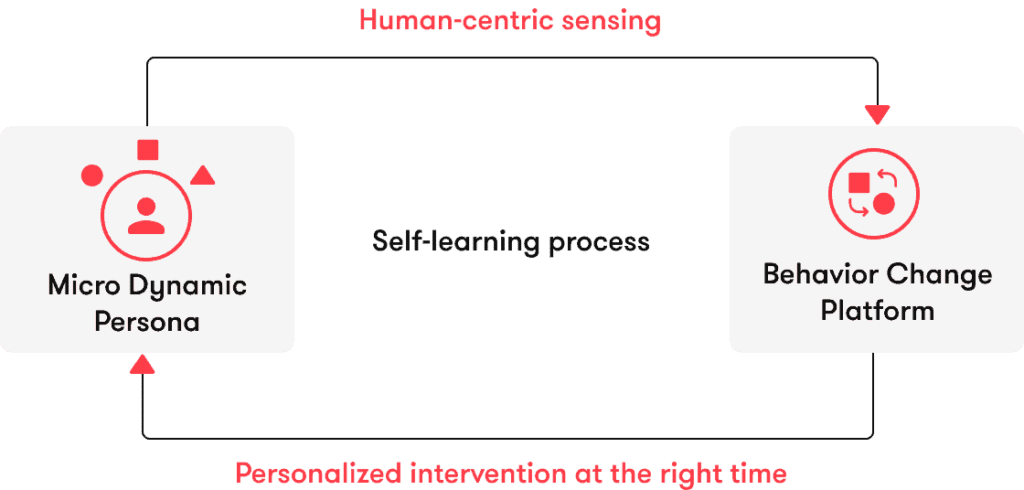

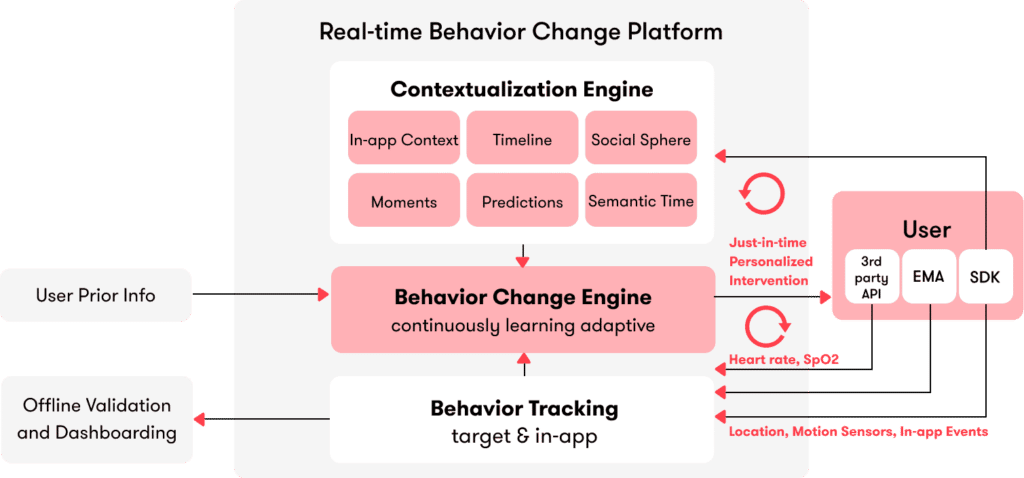

As we will describe in this blog, our Platform (Fig. 2) ingests human-centric sensor data streams and derives behavioral and contextual insights. Those insights are then combined to optimize the timing, type, and content of digital interventions. As time goes by, our platform learns the best strategy for each specific user, yielding a well-timed, personalized intervention strategy that maximizes business-relevant outcomes.

Our approach is user-centric. We recognize that each one of us is a unique persona per se, whose characteristics change over time. Our vision builds on a self-learning system that adapts to each individual’s micro-dynamic persona.

Fig.2: High-level representation of the Sentiance behavior change system: user data are collected automatically and fed into the platform which in turn provides optimized future interventions to maximize future outcomes. As time goes by, the system will learn the best strategy for each user.

Use-case definition

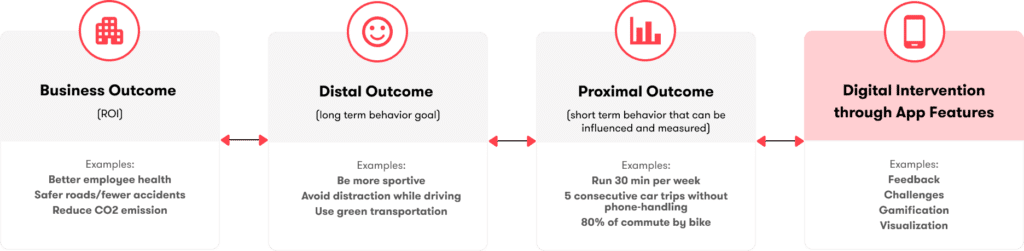

The process with our client (Fig.3) starts by identifying a use case where a change outcome benefits both our clients and their users. That business outcome does not need to be behavioral. It can be defined either independently by our client or in collaboration with our product and business development teams.

Fig.3: Description of the process to convert a business-relevant behavior to a list of app feature candidates.

For instance, a health insurance company wants better health for its customers. That business outcome is clearly beneficial for both the company (fewer claims to pay) and the customers (a longer, higher-quality life).

A car insurance company wants safer roads, which is good for its customers, or fewer cars on the road, which is good for the environment. For the insurer, both business outcomes lead to fewer claims to pay out, a clear return on investment.

While business outcomes such as better health, safer roads, or lower carbon emissions are broad enough to get different stakeholders on board and define ROI, they are not actionable from an implementation perspective. We first need to define the long-term goal of the intervention, the distal outcome. Next, we must identify a proximal outcome that mediates the distal outcome. The proximal outcome, the short-term behavior central to our change framework, is chosen so that it can be influenced using psychological methods and measured using data science and engineering techniques.

In the case of health, regular physical activity is a distal outcome because it usually leads to better health. A proximal outcome could be to run at least 30 minutes per week: it is a step toward regular activity, we can coach people to achieve that goal, and it is possible to measure physical activity routines.

In the case of safer roads, eliminating phone handling while driving is a distal outcome: distracted driving increases the risk of an accident. Completing five consecutive focused trips (without phone handling) is a proximal outcome: it is a step toward focused driving habits, we can use different behavior change techniques to reduce how often phone handling occurs, and we can use machine learning techniques to detect whether someone is using their phone while driving.
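To make these two proximal outcomes concrete, the following minimal Python sketch checks them against measured data. It assumes hypothetical inputs (a list of running-session durations, in minutes, for one week, and a chronological list of per-trip phone-handling flags); it is an illustration, not Sentiance's data model or API.

```python
from typing import Sequence


def met_weekly_running_goal(run_minutes: Sequence[float], goal_minutes: float = 30.0) -> bool:
    """Health example: did the user run at least `goal_minutes` in total this week?"""
    return sum(run_minutes) >= goal_minutes


def focused_trip_streak(phone_handling: Sequence[bool]) -> int:
    """Number of most recent consecutive trips without phone handling.

    `phone_handling` is in chronological order; True means phone handling
    was detected on that trip.
    """
    streak = 0
    for handled in reversed(phone_handling):
        if handled:
            break
        streak += 1
    return streak


def met_focused_driving_goal(phone_handling: Sequence[bool], required_trips: int = 5) -> bool:
    """Road-safety example: were the last `required_trips` trips all focused?"""
    return focused_trip_streak(phone_handling) >= required_trips


print(met_weekly_running_goal([12, 20]))                                    # True
print(focused_trip_streak([False, True, False, False, False, False]))       # 4
print(met_focused_driving_goal([False, True, False, False, False, False]))  # False
```

The streak is counted backwards from the latest trip, so a single distracted trip resets progress toward the five-trip goal.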

From behavioral science to app features

Principles guiding the design of behavioral change interventions are grounded in behavioral science and are drawn from psychology, sociology, and behavioral economics. They take into account psychological, social, contextual, and societal factors, such as attitude, habits, social perception, to explain how behaviors develop and change over time.

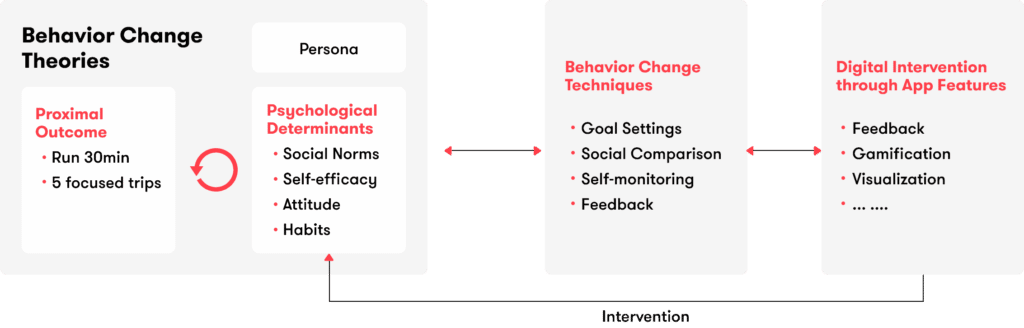

In a follow-up blog, we’ll detail the process of analyzing, modeling, and implementing a behavior change framework starting from a business-relevant use case. We’ll summarize the approach here (Fig.4).

Fig.4: Process to map proximal outcomes to app feature candidates. Behavioral scientists model the behaviors of interest, identify relevant change techniques, and select relevant app features.

Theories of change help in the design of interventions by describing the onset and dynamics of behavior change. Many theories exist; here are a few popular ones:

- The stages of change model, aka the transtheoretical model, assumes that people can be in different stages of change and readiness. Interventions will be tailored according to those stages: precontemplation, contemplation, preparation, action, maintenance, and termination.

- The social cognitive theory emphasizes the role of social perception, from observing and learning from others to positive and negative reinforcement.

- The theory of planned behavior assumes that people’s behaviors are determined by intention, which in turn depends on attitudes, subjective social norms, and perceived ability to change, aka self-efficacy.

- The Fogg Behavior Model assumes that behavior change depends on the simultaneity of three different factors: motivation, ability and triggers.

Such theories provide a framework to understand and model how the interactions between proximal outcome, persona, and psychological determinants can drive behavior change. Combined with in-depth research on the user population, they allow one to understand the target behavior and select a broad approach. Designing a specific behavior change methodology, i.e. selecting the right intervention using the appropriate design tools, can be guided by a standardized framework such as the COM-B behavior change wheel [6].

The behavior change techniques (BCTs) framework developed by Michie et al. [7] is widely used to design the components of an intervention. BCTs are defined as observable, replicable, and irreducible components of an intervention designed to change behavior. On the one hand, the BCT taxonomy maps techniques such as “goal setting”, “social comparison”, and “feedback on behavior” to theoretical models. On the other hand, BCTs can be mapped to specific app features (Fig.4) [8].

As a result of their intervention strategy design, behavioral scientists are able to map a proximal outcome to specific app features. Note that a single app feature can contain multiple BCTs.

| Proximal outcome | BCTs | App features |
|---|---|---|
| At least 2 hours of outdoor physical activity per week | prompting | Push notification |
| | self-monitoring | Score visualization |
| | personalized messages | Push notification |
| | goal-setting | Challenge screen |

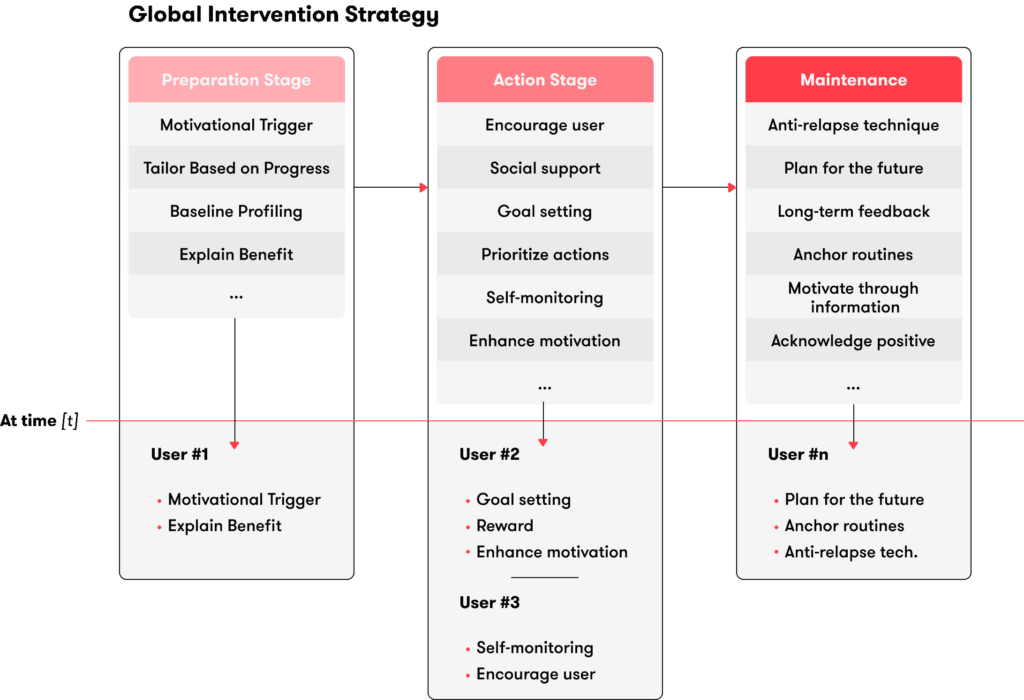

Considering each stage of the stages of change model, behavioral scientists end up with an extensive list of BCTs to be implemented through several app features at different stages of change. The possibilities are therefore numerous, as illustrated in Fig.5. While the design is based on evidence from the literature and on experience, certain BCTs are known to work better for some users than for others. This calls for strategy personalization.

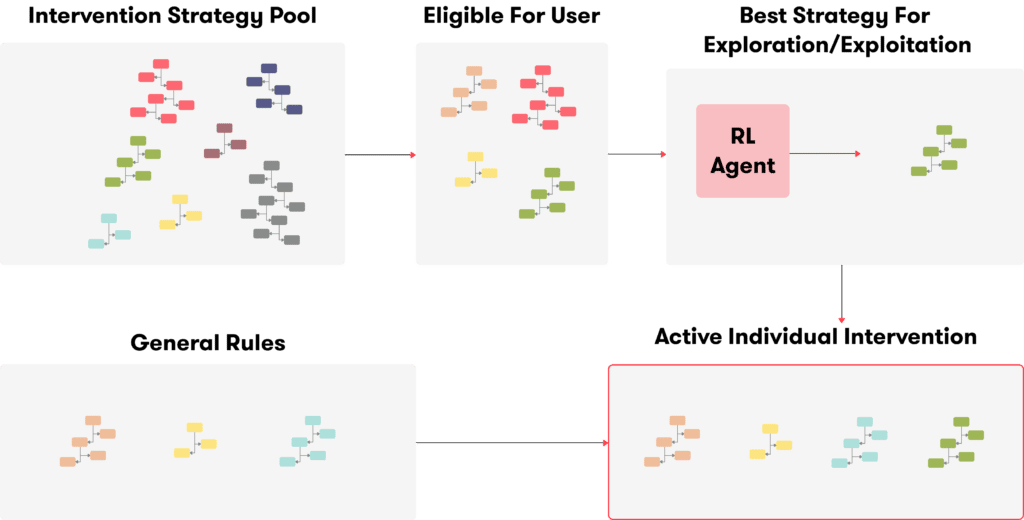

Fig.5: At every point in time, our platform will personalize digital interventions from a global list of component candidates.

As shown in Fig.5, Sentiance provides technology which, for each individual user,

- infers the user’s stage of change

- specifies the timing and the type of techniques/features to use and their content, out of a list of candidates, in order to maximize change outcome.

The three dimensions of digital intervention

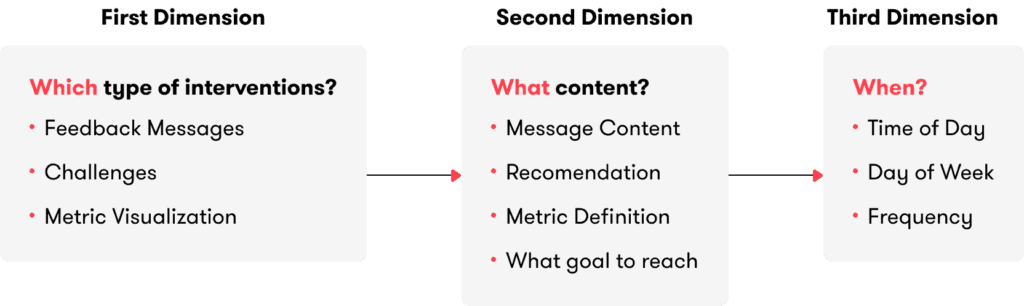

As we have seen, digital interventions consist of multiple components, i.e. different app features, such as feedback messages, challenges, or leaderboard screens, implementing different behavior change techniques. In order to understand how to build the most efficient “packaged” app, we first need to describe interventions at the component level. What characteristics are involved in the design of a single digital intervention component? We describe a behavior change (BC) component along three dimensions (Fig.6).

Fig.6: The three dimensions of digital intervention

We have already talked about the first dimension: which type of component should be used? Different BC techniques map to different app features, and the list of candidate app features to choose from comes out of the behavior change methodology described above.

The second dimension is: for a certain type of component, what content should we use? For a feedback messaging component, what should be the information provided? Content goes beyond text. If we want to show a score, how do we define that score? If we want to set goals, what goal should we choose? Design choices, variations in challenges, recommendation possibilities, subtleties in a certain kind of nudge, all are about choosing content for a certain component type.

The third dimension is the most straightforward: when should a certain component be delivered? In other words, when should we intervene using a specific component type and content? At what frequency (how many times a day), and how regularly (randomly or every hour)? As we will see, our solution provides the decision points, i.e. the just-in-time moments when the user is most receptive to engaging with the app and most susceptible to change.

Note that some components are punctual, i.e. events in time, such as a push notification or an in-app message, while others are long-lasting, such as challenges or score visualization. The difference lies in their time scale: a push notification operates at a resolution of hours, whereas score visualization operates over multiple days and can potentially be switched on and off several times during a campaign.
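The three dimensions, and the punctual vs. long-lasting distinction, can be captured in a small data structure. The sketch below is only an illustration: the class name, the field names, and the use of an optional duration to mark long-lasting components are our assumptions, not a Sentiance schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class InterventionComponent:
    component_type: str                   # "which": e.g. "push_notification"
    content: str                          # "what": message text, score definition, goal...
    start: datetime                       # "when": delivery (or switch-on) time
    duration: Optional[timedelta] = None  # None marks a punctual component

    @property
    def is_punctual(self) -> bool:
        # Punctual components are single events in time.
        return self.duration is None

    def is_active(self, t: datetime) -> bool:
        """True if a long-lasting component is switched on at time t."""
        if self.duration is None:
            return False  # punctual components have no "on" period
        return self.start <= t < self.start + self.duration


nudge = InterventionComponent("push_notification", "Nice weather for a bike ride!",
                              datetime(2021, 5, 3, 17, 30))
score = InterventionComponent("score_visualization", "weekly_active_minutes",
                              datetime(2021, 5, 3), duration=timedelta(days=7))
print(nudge.is_punctual, score.is_punctual)          # True False
print(score.is_active(datetime(2021, 5, 5, 12, 0)))  # True
```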

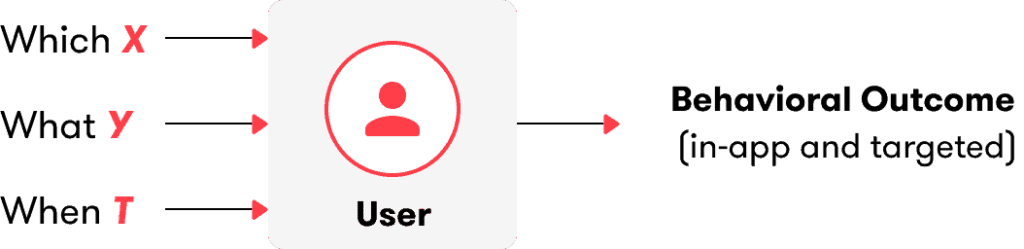

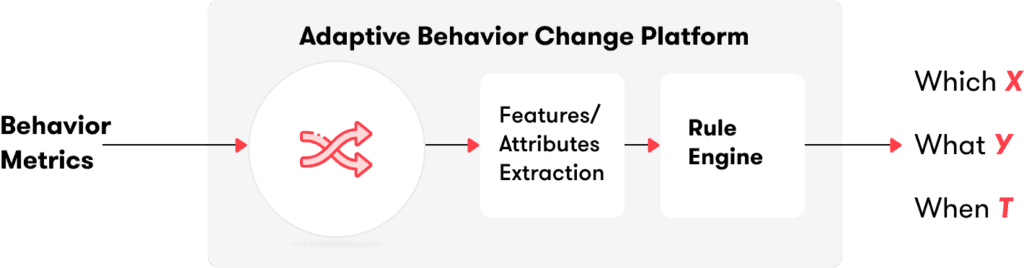

We cast our BC intervention as a system optimization problem. The user, at a certain point in time, is a cognitive and sociological (open) system with a certain (complex) state (Fig.7). A digital BC intervention can be applied to that system by choosing its components along the three dimensions described before: which type (the first dimension), what content (the second dimension), and when (the third dimension).

Fig.7: A human user can be seen as a cognitive and sociological (open) system with a certain (complex) state. Every input intervention will yield behavioral outcomes that can be measured.

Our solution brings to our client a technology that optimizes the “which”, “what”, and “when” of an intervention component in order to maximize intervention outcomes. As we will describe later, that optimization is based on our AI tech stack and contextualization engine.

Measuring behavioral outcomes

Depending on the user’s intrinsic cognitive and sociological traits, and on their current mental state, the intervention will result in two different kinds of outcomes: the in-app behavior and the targeted outcome. For instance, if we nudge someone to go biking through push notification, the user will either open the notification or not (in-app digital behavior outcome) and if (s)he does, go biking or not (target outcome).

The user can therefore accept the intervention (i.e., open the notification and follow the advice), dismiss it (i.e., open it but not follow the advice because of their context), or ignore it (i.e., not open it because they are not in a mobile-receptive state).
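The three possible responses follow from two observations: whether the notification was opened (in-app outcome) and whether the advice was followed (target outcome). A minimal sketch with hypothetical names:

```python
from enum import Enum


class InterventionOutcome(Enum):
    ACCEPTED = "accepted"    # opened and followed the advice
    DISMISSED = "dismissed"  # opened, but did not follow the advice
    IGNORED = "ignored"      # not opened at all


def classify_outcome(opened: bool, followed: bool) -> InterventionOutcome:
    """Combine the in-app outcome (opened) with the target outcome (followed)."""
    if not opened:
        # Simplification: behavior without opening is not credited to the nudge.
        return InterventionOutcome.IGNORED
    return InterventionOutcome.ACCEPTED if followed else InterventionOutcome.DISMISSED


print(classify_outcome(opened=True, followed=False).value)  # dismissed
```

In this simplification, a user who goes biking without opening the notification counts as having ignored it; the target behavior itself is still measured separately.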

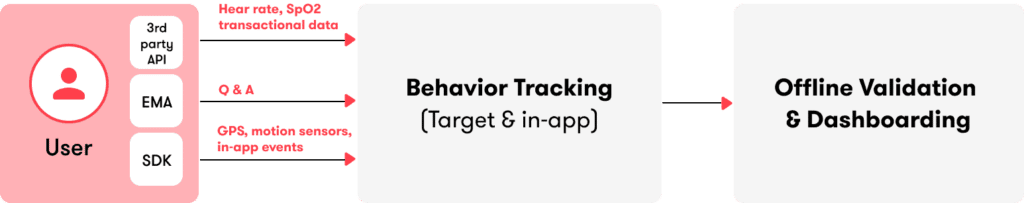

Optimizing the component dimensions in order to maximize behavioral outcomes, i.e. getting the user to accept interventions as often as possible, requires technology that accurately measures those outcomes. In other words, intelligent technology is needed to quantify behaviors. Our platform supports the collection, ingestion, and processing of several human-centric data types (Fig.8): four sources of passive data, which require no user interaction, and one active source of data:

Passive data streams:

- Motion data (Sentiance SDK)

- In-app events (Sentiance SDK)

- Biomarkers (3rd-party APIs)

- Transactional data (client)

Active assessment:

- Experience sampling aka EMA

Fig. 8: Different data streams can be collected to measure and track different behaviors.

Passive behavior tracking

In order to avoid subjective and latency bias, as well as digital fatigue, the ideal system should be able to track behavior without the need for user interaction. The first body of data that we should collect is therefore passive, without any active inputs from the users.

The world can be sensed in multiple ways, allowing the collection of many different kinds of raw data. Raw data is sensor-based and has no semantics attached to it. Both domain knowledge and the latest advancements in machine learning are needed to extract useful insights.

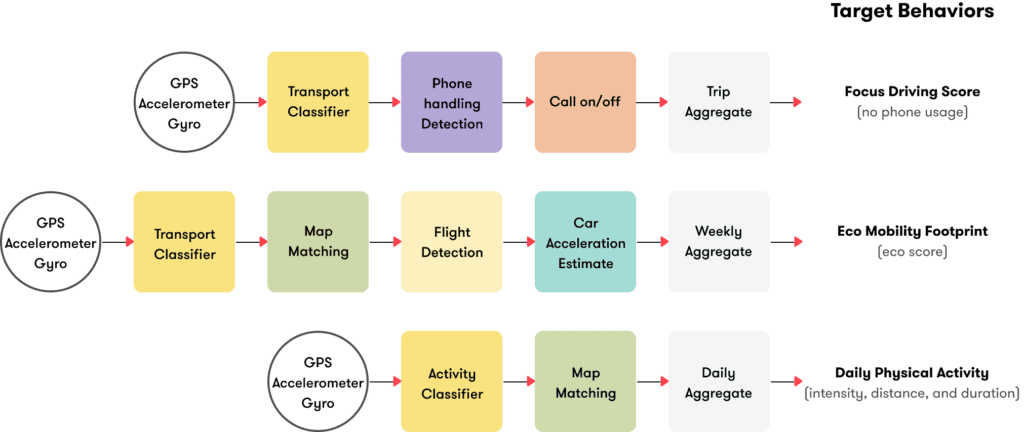

The first kind of data, called “motion data”, consists of mobile sensor data such as location waypoints and accelerometer and gyroscope streams. As explained later, this kind of data offers a wealth of information about the what, where, when, why, and who of the user's whereabouts.

For instance, as shown in Fig.9, our platform can quantify and track driving behavior, the use of eco-friendly transport modes, or the user's amount of physical activity. Any motion behavior, as well as behavior linked to the frequency and type of venue visits, can be tracked with our core platform.

Fig.9: Examples of conversions from low-level data to relevant behavior metrics using the core Sentiance technology
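As a rough illustration of converting low-level data into behavior metrics, the sketch below assumes that trips have already been segmented and labeled with a transport mode (the job of the core platform) and computes two weekly metrics: the share of distance covered with eco-friendly modes and the minutes of active transport. The segment schema and the mode sets are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable

ECO_MODES = {"walking", "running", "biking", "bus", "train", "tram"}  # assumed labels
ACTIVE_MODES = {"walking", "running", "biking"}


@dataclass
class TransportSegment:
    mode: str
    distance_km: float
    duration_min: float


def eco_distance_share(segments: Iterable[TransportSegment]) -> float:
    """Fraction of the total distance travelled with eco-friendly modes."""
    segments = list(segments)
    total = sum(s.distance_km for s in segments)
    if total == 0:
        return 0.0
    return sum(s.distance_km for s in segments if s.mode in ECO_MODES) / total


def active_minutes(segments: Iterable[TransportSegment]) -> float:
    """Minutes spent in physically active transport modes."""
    return sum(s.duration_min for s in segments if s.mode in ACTIVE_MODES)


week = [
    TransportSegment("car", 30.0, 35.0),
    TransportSegment("biking", 6.0, 20.0),
    TransportSegment("walking", 1.5, 18.0),
    TransportSegment("train", 12.5, 15.0),
]
print(round(eco_distance_share(week), 2))  # 0.4
print(active_minutes(week))                # 38.0
```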

Besides offline behavior insights, it is critical to understand the user's in-app digital behavior. How often does the user open the app, how long do they look at a certain screen, and what content catches their attention most? All of those insights are useful to characterize digital engagement and fatigue. Sentiance provides app developers with an API to implement in-app behavior tracking; the tracked events are sent to our platform via our SDK.
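Once in-app events are tracked, engagement metrics are simple aggregates. The sketch below assumes a hypothetical event log (the event names `app_open` and `screen_view` and their fields are illustrative, not the Sentiance SDK's API) and computes app opens per active day and mean dwell time per screen.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, NamedTuple


class InAppEvent(NamedTuple):
    timestamp: datetime
    name: str              # hypothetical event names: "app_open", "screen_view"
    screen: str = ""
    duration_s: float = 0.0


def opens_per_day(events: List[InAppEvent]) -> float:
    """App opens divided by the number of days with at least one logged event."""
    days = {e.timestamp.date() for e in events}
    opens = sum(1 for e in events if e.name == "app_open")
    return opens / len(days) if days else 0.0


def mean_dwell_by_screen(events: List[InAppEvent]) -> Dict[str, float]:
    """Average time spent per view, for each screen."""
    durations: Dict[str, List[float]] = defaultdict(list)
    for e in events:
        if e.name == "screen_view":
            durations[e.screen].append(e.duration_s)
    return {screen: sum(d) / len(d) for screen, d in durations.items()}


events = [
    InAppEvent(datetime(2021, 5, 3, 8, 0), "app_open"),
    InAppEvent(datetime(2021, 5, 3, 8, 1), "screen_view", "score", 40.0),
    InAppEvent(datetime(2021, 5, 3, 19, 0), "app_open"),
    InAppEvent(datetime(2021, 5, 3, 19, 1), "screen_view", "challenge", 15.0),
    InAppEvent(datetime(2021, 5, 4, 7, 30), "app_open"),
    InAppEvent(datetime(2021, 5, 4, 7, 31), "screen_view", "score", 20.0),
]
print(opens_per_day(events))         # 1.5
print(mean_dwell_by_screen(events))  # {'score': 30.0, 'challenge': 15.0}
```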

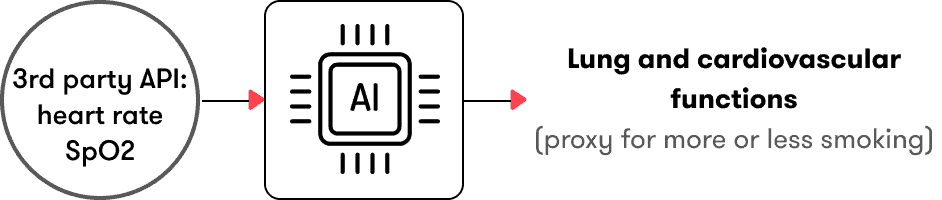

We don’t want our BC platform to be limited to what the motion and in-app data streams can reveal about behaviors. In order to cover more use cases, our platform supports the ingestion and processing of third-party data such as physiological markers (heart rate, skin conductivity, or blood oxygen saturation), more and more of which can be collected through commercial wearables. Our machine learning technology can be customized to convert such raw data into useful insights such as emotional state, stress, mental energy, or lung function (Fig.10). Sentiance technology can ingest that data either through its SDK via the Google Fit or Apple Health APIs, or server-to-server via customized protocols.

Fig.10: Customized intelligence can be implemented to derive relevant insights from third-party data sources

Another stream of data relevant for quantifying certain behaviors is the transactional data that our clients provide. Swiping a card, filing a claim, engaging with a product: these are all data points that can be collected and analyzed to track progress on a particular behavior. Our backend provides APIs to upload such data streams.

Active behavior tracking

The four previous data streams (motion, in-app, physiological, and transactional data) are passive assessments of behavior: they require no input from the user. While this is the most objective and scalable way of tracking behavior, feedback from the user is often useful. Hence, we allow for the integration of an efficient active assessment module (aka experience sampling or ecological momentary assessment (EMA)). Users can be prompted, at the right time of day, with questions about their level of stress, tiredness, happiness, and so on. That data can be combined with passive insights to derive a more complete picture of behavior.

Experimentation and validation

The first obvious use of this behavior tracking is the ability to design, implement, and conduct experiments. Such a system allows one to go beyond standard cross-sectional experiments [5], where very few data points are available per user. High temporal resolution at the user level allows for precise longitudinal analysis, which is very useful to validate adaptive interventions and conduct causal analysis [9]. Moreover, meaningful behavioral metrics, coupled with the flexibility of app feature design and deployment, allow behavioral and data scientists to study the efficacy of single components and optimize intervention packages [10]. This topic of innovative validation will be covered in another blog post.

Adapting intervention to current and past behaviors

Measuring digital and target behaviors/outcomes in real time gives the system the opportunity to adapt its next interventions to current and past behaviors. By feeding the behavior metrics back to the platform, we can optimize the “which” and “what” dimensions of an intervention, analyzing past and present behavior in order to maximize future change (Fig.11).

Fig.11: Closing the first loop: feeding behavior metrics back to the platform allows the system to adapt to past and current behaviors in order to optimize future predictions.

Let’s open the box of the behavior change engine we have presented so far (Fig.12). Its input consists of behavior measurements (context will come later). Those measurements are stored in a database to allow historical data analysis. Next, features combining multiple past and present measurements are calculated. Those features, depicted as white boxes in Fig. 13, are processed by a rule engine, possibly chaining multiple rules into a decision tree. Our decision engine is built on top of a domain-specific language (DSL) so that data and behavioral scientists can easily create new features and implement their decision trees without the need for engineering resources.

Fig.12: The change engine consists of a database, a feature extractor, and a rule engine.
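The three parts of the engine (database, feature extractor, rule engine) can be sketched in plain Python. This does not reproduce Sentiance's DSL: the in-memory store, the feature names, and the engagement rules (loosely in the spirit of Fig.13A) are all hypothetical, with rules chained as decision-tree nodes.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List, Union

Measurement = Dict[str, float]
Features = Dict[str, float]


@dataclass
class MeasurementStore:
    """Stand-in for the database: chronological daily measurements per user."""
    history: Dict[str, List[Measurement]] = field(default_factory=dict)

    def add(self, user: str, measurement: Measurement) -> None:
        self.history.setdefault(user, []).append(measurement)


def extract_features(rows: List[Measurement]) -> Features:
    """Feature extractor: combine past and present measurements (last 7 days)."""
    last7 = rows[-7:]
    return {
        "opens_today": last7[-1]["app_opens"],
        "opens_7d_mean": mean(r["app_opens"] for r in last7),
        "dismiss_rate_7d": mean(r["dismissed"] / max(r["notifications"], 1) for r in last7),
    }


@dataclass
class Rule:
    """Decision-tree node: evaluate a predicate, then follow a branch.
    A branch is either another Rule (chaining) or a final action name."""
    predicate: Callable[[Features], bool]
    if_true: Union["Rule", str]
    if_false: Union["Rule", str]

    def decide(self, features: Features) -> str:
        branch = self.if_true if self.predicate(features) else self.if_false
        return branch.decide(features) if isinstance(branch, Rule) else branch


engagement_tree = Rule(
    predicate=lambda f: f["dismiss_rate_7d"] > 0.5,                 # notification fatigue?
    if_true="reduce_notification_frequency",
    if_false=Rule(
        predicate=lambda f: f["opens_today"] < f["opens_7d_mean"],  # engagement dropping?
        if_true="send_engagement_nudge",
        if_false="keep_current_strategy",
    ),
)

store = MeasurementStore()
for day in [{"app_opens": 3, "notifications": 2, "dismissed": 0},
            {"app_opens": 4, "notifications": 2, "dismissed": 1},
            {"app_opens": 1, "notifications": 2, "dismissed": 0}]:
    store.add("user-1", day)
features = extract_features(store.history["user-1"])
print(engagement_tree.decide(features))  # send_engagement_nudge
```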

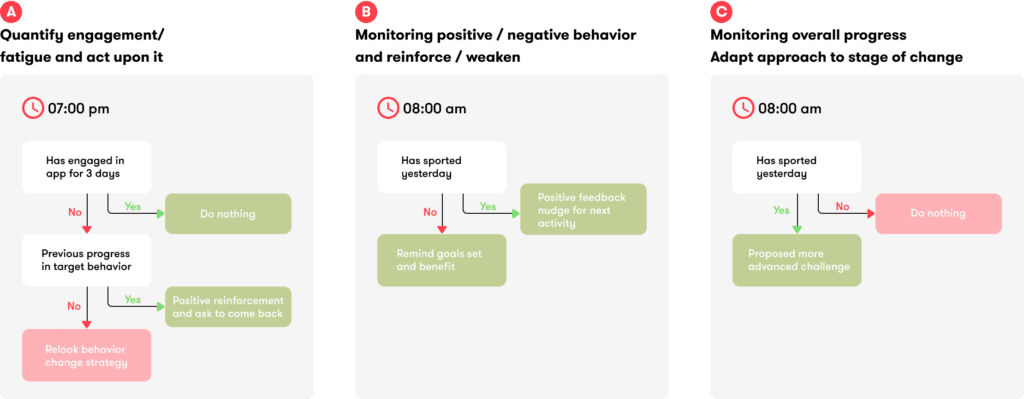

A first approach is to compare the measured level to the desired target level, implementing basic control engineering [12]. For instance, by tracking digital engagement or fatigue, one can adapt the strategy in order to maximize engagement (Fig. 13A). By tracking a target behavior such as “biking more” and comparing it to a target value, the system can tweak the amount of incentives and kudos it delivers (Fig. 13B).

Fig.13: Examples of decision trees involving adaptation to current and past behaviors
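A minimal sketch of the compare-to-target idea behind Fig.13B, assuming a hypothetical weekly biking target in kilometers and an incentive level in abstract points. Each week, the incentive moves by a gain times the gap between target and measurement, so a persistent shortfall keeps raising it (integral-style action); the gain and bounds are arbitrary illustration values.

```python
def update_incentive(current: float, target_km: float, measured_km: float,
                     gain: float = 2.0, lower: float = 0.0, upper: float = 100.0) -> float:
    """One weekly control step (hypothetical units: incentive points).

    Falling short of the target raises the incentive, overshooting lowers it,
    and the result is clamped to [lower, upper] to respect a budget.
    """
    error = target_km - measured_km
    return max(lower, min(upper, current + gain * error))


incentive = 20.0
for measured_km in [10.0, 14.0, 22.0]:  # weekly km biked against a 20 km target
    incentive = update_incentive(incentive, target_km=20.0, measured_km=measured_km)
    print(incentive)  # 40.0, then 52.0, then 48.0
```

The gain sets how aggressively the system reacts; too high a value would make incentives oscillate from week to week.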

On a longer timescale, the system can adapt the entire strategy to different progress milestones (Fig. 13C), implementing the transtheoretical model of change. For instance, if the user is in the contemplation stage, awareness techniques through feedback messages are delivered. In the action stage, incentives for physical activity practice and advanced challenges are better suited. Using active (through experience sampling) and/or passive assessment (through sensing), our system can automatically infer individual stages and trigger appropriate decision trees.
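The stage inference and the stage-dependent choice of techniques could look like the sketch below. The thresholds follow the usual transtheoretical model definitions (action: behavior changed within the last six months; maintenance: sustained for longer; preparation: intending to act within the next 30 days; contemplation: within the next six months), but the inputs (consecutive weeks meeting an activity goal from passive sensing, and an EMA answer about intent) and the technique names are hypothetical. Termination is left out.

```python
from enum import Enum
from typing import Dict, List, Optional


class Stage(Enum):
    PRECONTEMPLATION = 1
    CONTEMPLATION = 2
    PREPARATION = 3
    ACTION = 4
    MAINTENANCE = 5


def infer_stage(consecutive_active_weeks: int, intends_within_days: Optional[int]) -> Stage:
    """Rule-based stage inference.

    consecutive_active_weeks: passive sensing, weeks in a row meeting the activity goal.
    intends_within_days: EMA answer, None if the user has no intention to change.
    """
    if consecutive_active_weeks >= 26:   # sustained for about six months
        return Stage.MAINTENANCE
    if consecutive_active_weeks > 0:     # changed, but recently
        return Stage.ACTION
    if intends_within_days is None:
        return Stage.PRECONTEMPLATION
    if intends_within_days <= 30:
        return Stage.PREPARATION
    if intends_within_days <= 182:       # about six months
        return Stage.CONTEMPLATION
    return Stage.PRECONTEMPLATION


# Each stage triggers its own set of techniques (hypothetical names).
STAGE_TECHNIQUES: Dict[Stage, List[str]] = {
    Stage.PRECONTEMPLATION: ["awareness_feedback_messages"],
    Stage.CONTEMPLATION: ["awareness_feedback_messages"],
    Stage.PREPARATION: ["goal_setting_screen"],
    Stage.ACTION: ["activity_incentives", "advanced_challenges"],
    Stage.MAINTENANCE: ["self_monitoring_score", "relapse_prevention_messages"],
}

stage = infer_stage(consecutive_active_weeks=0, intends_within_days=90)
print(stage.name, STAGE_TECHNIQUES[stage])  # CONTEMPLATION ['awareness_feedback_messages']
```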

Adapting intervention to user context and changing profiles

Behavioral change is a complex process

Typically, the concept of persona is studied as a static representation of a subpopulation. Models of change that include persona and personality traits are usually fed with those fixed insights before the start of a campaign. In reality, each individual is a persona of their own, evolving over weeks. We call this concept the micro-dynamic persona: a truly atomic and dynamic division of each client’s target population.

Behavior change models can also integrate shorter-term processes such as the user's internal mental states, like stress, mood, or momentary intent. A user won’t go biking if she is overly stressed, in a bad mood, or intending to go shopping. External personal factors can also play a critical role in behavior change outcomes: weather, semantic time (e.g., the user’s morning starts at 6 am), the purpose of an action (commuting to work vs. holidays), location (home vs. work vs. supermarket), and so on.

Fig.14: Including more complexity in the behavior change models to better reflect reality

While it’s clear that context matters [11], there is less evidence-based research on the effects of those highly dynamic, personal, and contextual factors on changing specific behaviors. It is therefore impractical to design in advance an intervention that takes those factors into account and is relevant for a whole population. Ideally, a system should learn how to adapt its strategy for every single user, based on that user's real-time context.

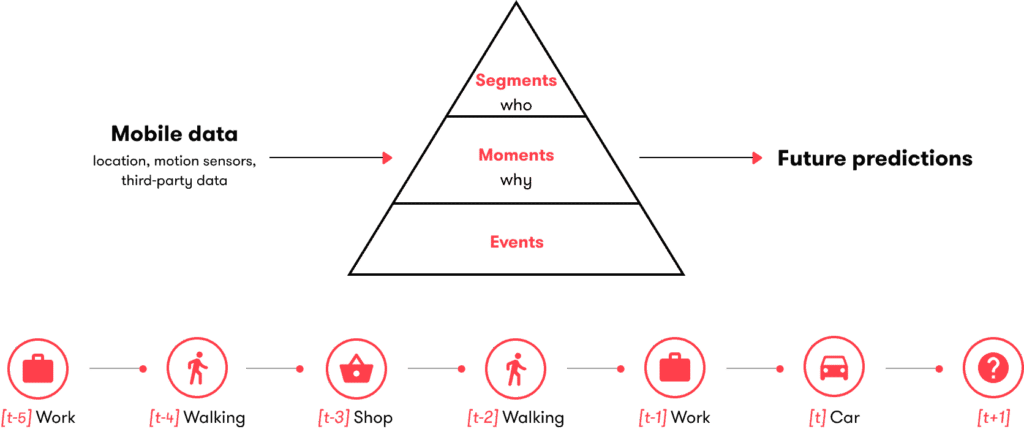

Sentiance contextual engine

Our contextual engine (Fig.15) provides key complex behavioral insights. It ingests low-level data collected while the user is on the move, such as location waypoints and motion sensor streams (e.g., accelerometer and gyroscope). More than 20 machine learning pipelines convert that low-level data into actionable insights.

Fig. 15: Visualization of the processing performed by our contextualization engine and an example of a user timeline and its prediction.

The first layer of intelligence, called the event layer, extracts the context basics; if the user is stationary, are they at home, at work or in a specific venue (supermarket, gym, restaurant, theatre…)? If the user is on the move, what is their transport mode (biking, walking, bus, car, train…) and what trajectory are they following? If the user is in a car, how do they drive (hard acceleration, speeding, phone handling…), are they a driver or a passenger?

The second layer of intelligence, called the moment layer, looks at the user timeline to derive the purpose of a sequence of events, i.e. the why. For instance, a car trip can occur because the driver is dropping off their kids (home->car->school->car->), because they are commuting to work (home->car->work), or because they are a taxi driver. Someone can be at the supermarket because they are grocery shopping, having a drink, or working. See our documentation for a complete list of available moments; new moments can also be created when relevant.

Another important concept is the semantic time. A morning routine doesn’t start at the same time for everyone: an early bird will wake up at 6 am, while a night owl might prefer to stay in bed until 9 am. Calculating individual semantic time is important in order to engage with a user at the right time.
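One simple way to estimate an individual's semantic morning, assuming we observe each day's first activity time (e.g., the first detected movement or departure), is to take the median over recent days, which is robust to an occasional late start. The 3-hour morning length and the function names are assumptions, and the sketch ignores routines that cross midnight.

```python
from datetime import datetime, time
from statistics import median
from typing import List


def _minutes(t: time) -> int:
    return t.hour * 60 + t.minute


def estimate_morning_start(first_activity_times: List[time]) -> time:
    """Median of the daily first-activity times observed over recent days."""
    m = int(median(_minutes(t) for t in first_activity_times))
    return time(m // 60, m % 60)


def in_semantic_morning(t: datetime, morning_start: time, length_min: int = 180) -> bool:
    """True if t falls within the user's own morning (assumed to last 3 hours)."""
    start = _minutes(morning_start)
    return start <= _minutes(t.time()) < start + length_min


early_bird = estimate_morning_start([time(6, 0), time(6, 10), time(5, 50), time(6, 5), time(9, 30)])
night_owl = estimate_morning_start([time(9, 0), time(8, 45), time(9, 20)])
print(early_bird, night_owl)  # 06:05:00 09:00:00
at_830 = datetime(2021, 5, 3, 8, 30)
print(in_semantic_morning(at_830, early_bird), in_semantic_morning(at_830, night_owl))  # True False
```

The same clock time, 8:30, is mid-morning for the early bird but still before the night owl's morning, which is why a fixed schedule would mistime interventions for one of them.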

Our contextualization engine provides predictions of future behavior of the user, both at the event and moment levels. We can predict that a user is going to take their car to go shopping or go on their weekly jogging exercise.

The short-term event and moment insights are aggregated on a 9-week basis to derive user profiles. Who is the user? Are they a workaholic, a parent, a motorway driver, a green commuter? Do they have limited social contact or physical activity? Are they a student, working from home, or retired? Are those life-stage characteristics changing? A list of all existing segments is given on our documentation page and new segments can be created for specific use-cases.
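To illustrate how short-term events can be aggregated into a segment over a 9-week window, here is a hypothetical “green commuter” rule: a user qualifies if enough commutes were observed in the window and a large enough share of them used green modes. The thresholds, mode labels, and data format are our assumptions; Sentiance's actual segment definitions are documented separately.

```python
from datetime import date, timedelta
from typing import List, NamedTuple

GREEN_MODES = {"walking", "biking", "bus", "train", "tram"}  # assumed labels


class Commute(NamedTuple):
    day: date
    mode: str


def is_green_commuter(commutes: List[Commute], today: date, window_weeks: int = 9,
                      min_share: float = 0.6, min_commutes: int = 10) -> bool:
    """Hypothetical segment rule evaluated over a sliding window of `window_weeks`."""
    window_start = today - timedelta(weeks=window_weeks)
    recent = [c for c in commutes if window_start < c.day <= today]
    if len(recent) < min_commutes:
        return False  # not enough evidence in the window to assign the segment
    green = sum(c.mode in GREEN_MODES for c in recent)
    return green / len(recent) >= min_share


today = date(2021, 6, 30)
# 20 recent commutes, every other day: 14 by bike, 6 by car (share 0.7).
recent_commutes = [Commute(today - timedelta(days=2 * i), "biking" if i % 10 < 7 else "car")
                   for i in range(20)]
# 30 older car commutes fall outside the 9-week window and are ignored.
old = [Commute(today - timedelta(days=100 + i), "car") for i in range(30)]
print(is_green_commuter(recent_commutes + old, today))  # True
```

Because the window slides, re-evaluating the rule every week lets the segment change as the user's life stage or habits change.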

Adapting intervention to user context

All those behavioral insights, both short-term events and moments, as well as longer-term dynamic segments, are available to the decision engine to maximize behavioral change outcomes. A second loop is therefore created, feeding back context information that can be used as tailoring variables in the behavior change engine (Fig.16).

Fig. 16: The Sentiance behavior change platform: a second loop is added, feeding back contextual information to the engine in order to optimize future predictions.

Those context tailoring variables are combined in the decision engine to:

- Optimize intervention component type and content based on personalized insights.

- Optimize intervention timing to maximize digital and proximal target outcomes.

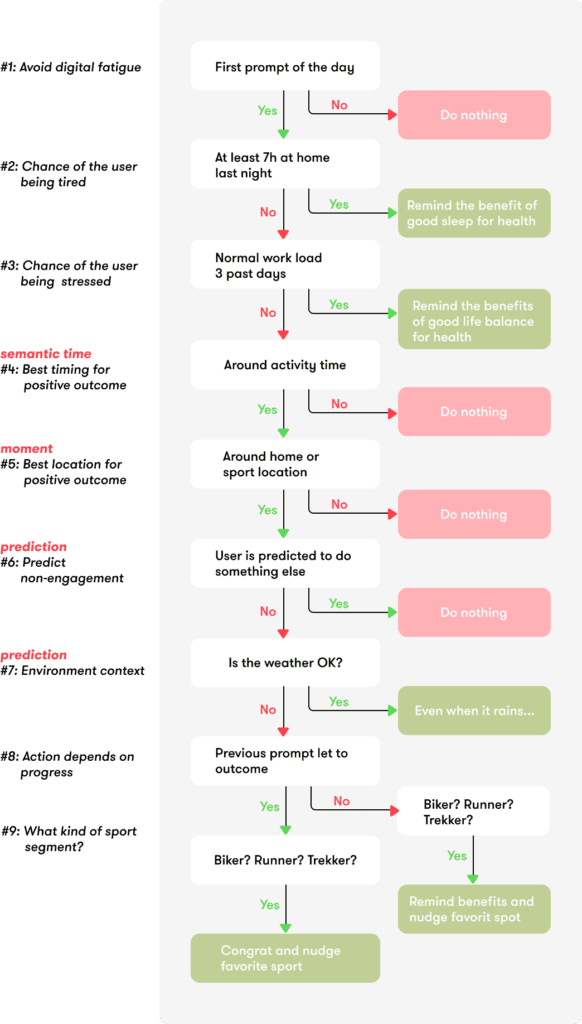

Let’s take a concrete example (Fig.17): we have detected that a user is in the maintenance stage for physical activity. For the sake of simplicity, the intervention component type is messaging through push notifications. Fig. 17 shows the high-level questions a behavioral scientist could find worth implementing in order to provide the right content in the right context. She would then work with a data scientist to implement those questions using features (white boxes) and rules (arrows).

In this example, the content is explicitly optimized based on context, and the timing of the intervention is optimized in the process. Rules that include temporal features, such as “Around activity time”, explicitly encode timing information. Other rules, involving the user's presence at a certain location (“Around home”) or future prediction outcomes (“predicted to do something else”), implicitly encode timing information and contribute to the just-in-time optimization process.

With that tree, the intervention designer seeks to optimize intervention timing to maximize digital engagement (rules 1,8) and target outcome (rules 2,3,4,5,6,7), as well as contextualizing the intervention content (rules 2,7,9) based on timeline info (rules 2, 3), external factors (rule 7) and user segments (rule 9).

Fig. 17: Example of a decision tree that could be used for maintaining physical activity
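Such a tree could be encoded as plain Python, as sketched below. It is not a reconstruction of Fig.17, whose individual rules are not listed here: the context fields, the 60-minute window, and the message templates are hypothetical, chosen to show timing rules (activity time, predictions) followed by content rules (weather, segments, location).

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Context:
    minutes_to_usual_activity: Optional[int]  # None if no activity routine detected
    near_home: bool
    predicted_other_plan: bool                # e.g. a predicted shopping trip
    raining: bool
    segments: FrozenSet[str]


def maintenance_nudge(ctx: Context) -> Optional[str]:
    """Return a push-notification template name, or None to stay silent."""
    # Timing rule: only intervene within the hour before the usual activity time.
    if ctx.minutes_to_usual_activity is None or not 0 <= ctx.minutes_to_usual_activity <= 60:
        return None
    # Timing rule: the user is predicted to do something else, so skip this slot.
    if ctx.predicted_other_plan:
        return None
    # Content rule based on an external factor.
    if ctx.raining:
        return "indoor_workout_suggestion"
    # Content rule based on a user segment.
    if "parent" in ctx.segments:
        return "family_activity_suggestion"
    # Content rule based on the user's timeline (current location).
    return "start_from_home_reminder" if ctx.near_home else "keep_up_the_streak"


print(maintenance_nudge(Context(30, True, False, False, frozenset())))  # start_from_home_reminder
```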

Self-learning: finding the best strategy for each individual

The design and implementation of the intervention strategies described so far require expert knowledge. Behavioral scientists, based on evidence from the literature and from previous experience, will come up with a set of:

- general rules that could be applied for all users;

- intervention strategies and rules for their eligibility.

Both general rules and intervention strategies are decision trees that contain high-level questions about the user's context and behaviors. With the help of data scientists, these are implemented so that available measurable metrics (proxies) match the high-level questions as closely as possible.

General rules are applied to all users, i.e. they are expected to maximize the outcome independently of the user's profile. Examples are “Don’t engage with the user when she is in the cinema” or “Don’t engage with the user when her semantic time is the night”.

Intervention strategies, on the other hand, are user-specific. First, they are filtered based on eligibility: for example, “Engage with the user 20 minutes after arriving home” would be relevant if the user doesn't have kids, whereas “Engage with the user during their evening routine” would be more relevant for parents. (While parents could be a segment detected by our contextualization engine, the underlying condition could be unknown to the experts or not measurable, and must therefore be learned by the system. In other words, our system should be able to find the latent variables responsible for positive responses and use them as tailoring variables.)

The behavior change engine depicted in Fig. 16 is a self-learning system that selects the best strategy from this subset for each individual and follows it until new data suggests that it is beneficial to make a switch.

Fig. 18: Intervention strategy pool and general rules are available to the intervention designer and decision engine in order to find the best strategy for each individual user.
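General rules and eligibility filtering can be sketched as predicates over the user's current state. The state keys (`venue`, `semantic_time`, `segments`) and the strategy names are hypothetical; the two general rules and the parent/non-parent eligibility example are the ones given above.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

UserState = Dict[str, Any]  # hypothetical: current context and detected segments

# General rules apply to every user; all of them must pass before engaging.
GENERAL_RULES: List[Callable[[UserState], bool]] = [
    lambda u: u.get("venue") != "cinema",         # don't engage in the cinema
    lambda u: u.get("semantic_time") != "night",  # don't engage at night
]


@dataclass(frozen=True)
class Strategy:
    name: str
    eligible: Callable[[UserState], bool]


STRATEGY_POOL = [
    Strategy("20_min_after_arriving_home", lambda u: "parent" not in u.get("segments", ())),
    Strategy("during_evening_routine", lambda u: "parent" in u.get("segments", ())),
    Strategy("weekend_outdoor_challenge", lambda u: True),  # eligible for everyone
]


def may_engage(user: UserState) -> bool:
    return all(rule(user) for rule in GENERAL_RULES)


def eligible_strategies(user: UserState) -> List[str]:
    """Subset of the pool that the self-learning engine may choose from."""
    return [s.name for s in STRATEGY_POOL if s.eligible(user)]


print(may_engage({"venue": "cinema", "semantic_time": "evening"}))  # False
print(eligible_strategies({"segments": {"parent"}}))
# ['during_evening_routine', 'weekend_outdoor_challenge']
```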

Different techniques, such as control systems engineering [12], multi-armed bandits [13], or reinforcement learning (RL) [14], can be used to solve this problem dynamically, i.e. by learning from the real-time user data stream. In an RL framework, the agent is responsible for optimizing the trade-off between exploration, where alternative intervention strategies are tested for a given user to gather more data, and exploitation, where the best intervention strategy given current information is preferred.
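As an example of the multi-armed bandit option, the sketch below uses Beta-Bernoulli Thompson sampling per user: each eligible strategy keeps a Beta posterior over its acceptance rate, the strategy with the highest sampled rate is played, and the posterior is updated with the observed outcome. Exploration happens naturally because uncertain strategies sometimes produce high samples. Treating “accepted” as a binary reward and using a uniform prior are our assumptions; this is not presented as the platform's algorithm.

```python
import random
from typing import Dict, List, Optional


class ThompsonStrategySelector:
    """Beta-Bernoulli Thompson sampling over one user's eligible strategies.

    Reward: 1 if the intervention was accepted, 0 otherwise (an assumption).
    """

    def __init__(self, strategies: List[str], seed: Optional[int] = None):
        # Beta(1, 1) is a uniform prior on each strategy's acceptance rate.
        self.alpha: Dict[str, float] = {s: 1.0 for s in strategies}
        self.beta: Dict[str, float] = {s: 1.0 for s in strategies}
        self.rng = random.Random(seed)

    def select(self) -> str:
        # Draw one plausible acceptance rate per strategy and play the best draw.
        draws = {s: self.rng.betavariate(self.alpha[s], self.beta[s]) for s in self.alpha}
        return max(draws, key=draws.get)

    def update(self, strategy: str, accepted: bool) -> None:
        if accepted:
            self.alpha[strategy] += 1.0
        else:
            self.beta[strategy] += 1.0


# Simulation with made-up acceptance rates that the selector does not see.
true_rates = {"after_arriving_home": 0.2, "evening_routine": 0.6}
env = random.Random(42)
selector = ThompsonStrategySelector(list(true_rates), seed=7)
counts = {s: 0 for s in true_rates}
for _ in range(500):
    chosen = selector.select()
    counts[chosen] += 1
    selector.update(chosen, accepted=env.random() < true_rates[chosen])
print(counts)
```

In this simulation, selections concentrate on the better strategy as evidence accumulates, while the weaker one is still tried occasionally, which is the exploration/exploitation trade-off described above.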

While timing optimization is implicitly performed using our decision trees, specific machine learning techniques can also be used to predict additional decision points when the user exhibits a high probability of mobile receptivity [15].

Let's talk

Are you interested in learning more about how our behavior change platform can be used for your business? Get in touch today and let's have a chat.

References

[1] Zhao, J., Freeman, B., Li, M. (2016). Can Mobile Phone Apps Influence People’s Health Behavior Change? An Evidence Review. J Med Internet Res.

[2] Nahum-Shani, I., et al. (2017). Just-in-time adaptive interventions (JITAIs) in mobile health: key components and design principles for ongoing health behavior support. Annals of Behavioral Medicine.

[3] Hekler, E.B., Michie, S., et al. (2016). Advancing Models and Theories for Digital Behavior Change Interventions. Am J Prev Med.

[4] Chevance, G., Perski, O., Hekler, E.B. (2020). Innovative methods for observing and changing complex health behaviors: four propositions. Translational Behavioral Medicine.

[5] Wongvibulsin, S., et al. (2020). An Individualized, Data-Driven Digital Approach for Precision Behavior Change. American Journal of Lifestyle Medicine.

[6] Michie, S., van Stralen, M.M., West, R. (2011). The behaviour change wheel: A new method for characterising and designing behaviour change interventions. Implementation Science.

[7] Michie, S., et al. (2013). The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med.

[8] Courtney, K.L., et al. (2019). Applying the Behavior Change Technique Taxonomy to Mobile Health Applications: A Protocol. Stud Health Technol Inform.

[9] Nahum-Shani, I., et al. (2020). SMART Longitudinal Analysis: A Tutorial. Psychological Methods.

[10] Collins, L.M. (2018). Optimization of Behavioral, Biobehavioral, and Biomedical Interventions: The Multiphase Optimization Strategy (MOST). Springer.

[11] Thomadsen, R., et al. (2018). How Context Affects Choice. Customer Needs and Solutions.

[12] Hekler, E.B., et al. (2018). Tutorial for Using Control Systems Engineering to Optimize Adaptive Mobile Health Interventions. J Med Internet Res.

[13] Klasnja, P., et al. (2015). Microrandomized trials: An experimental design for developing just-in-time adaptive interventions. Health Psychology.

[14] Ramos Rojas, J.A., et al. (2020). Activity Recommendation: Optimizing Life in the Long Term. IEEE International Conference on Pervasive Computing and Communications.