Handling late-arriving data on a streaming platform for contextualization

When context is king, time is queen. Using an imaginary use case scenario, we address some fundamental questions that typically arise in personalized motion intelligence technology:

- Why is computed context subject to change?

- Is there a difference between results from an API, firehose or offloads?

- How does Sentiance cope with late-arriving data?

Although you might think you live in the moment, your brain is constantly combining old and new experiences. A person’s perception of real-life events sometimes changes over time. Think about how, in high school, you were unable to relate to your history teacher’s endless stories about Ancient Egypt. Now, seeing the Great Pyramid of Giza with your own eyes may convince you that he really was a passionate person who simply struggled to convey his ardor. Funny how you’ve forgotten all the details he told you about those ancient times; back then, you found such information irrelevant.

Time changes everything. It’s the hard lesson you have to learn when building a platform for personalized motion intelligence technology. The Sentiance technology platform allows companies to create personalized services that improve people’s lives. We learn about the user by understanding patterns and habits in a digitalized representation of day-to-day life: the Sentiance timeline. This is a time sequence of detected events and the starting point from which to add value to your personal journey through life. We interpret and analyze the locations and routine transports in your journey. We can further enrich this timeline with additional context, such as driving scores for your car transports or your mobility profile. Beyond that, we identify moments that describe routine activities, such as commuting to work, shopping, or a three-day business trip. This broader contextualized timeline builds on your user profile and leads to predictions and recommendations that can make your life smarter, in a highly personalized way and with full respect for your privacy. It all starts with basic sensor data provided by that little pocket friend we all carry around: the smartphone.

But whatever happens to you today will not only impact your present context; it could have an influence on what we’ve already learned about you before. Any new insights can potentially ripple through to your past and towards your future because our platform builds up a memory and gets smarter by experience, just like humans. So time can change everything, including context, which is exactly what the Sentiance Platform is designed for. When context is king, time is queen.

From physical events to interpreted data

Let’s consider a simple timeline of real-life events that could happen to a random user installing the Sentiance Journeys app. We will use the following example throughout the text to illustrate how our technology constructs a virtual representation of a timeline that gradually evolves (yet improves!) over time. With every new low-level detection on the person’s smartphone, we learn more about the actual context and get closer to the ground truth.

Jon works for an international travel insurance group. For his work, he frequently prospects tourist destinations all over the world and is constantly pursuing the best personalized travel experience for his customers. He was asked to install the Journeys app a week ago to test whether the business could benefit from Sentiance’s motion intelligence. During that week he was mostly busy at the office, coaching his staff and preparing for his upcoming trip to Egypt.

Monday:

Jon leaves home at 3:00 pm, slightly later than planned, so he has to rush to catch his flight to Cairo. When he arrives at the airport, the flight turns out to be delayed, so with some time on his hands, he curiously checks the Journeys driving scores for his reported car transport to the airport, but apparently the results are not available yet.

Late in the evening, Jon arrives in Cairo and heads straight to the hotel to get a good night’s sleep, because the next few days will be exhausting.

Figure 1: On Monday, Jon travels to Cairo for his work and goes straight to bed upon arriving at the hotel.

Tuesday:

At 8:00 am during breakfast, Jon connects to the hotel’s wifi with his smartphone. When he finishes his first coffee, he notices that yesterday’s driving scores are now available. It looks like he could use some driver coaching, but at least Jon’s scores are still better than the taxi driver’s.

For the remainder of the day, he has meetings with business partners at several historical and cultural venues.

Wednesday and Thursday:

More meetings. The Great Pyramid of Giza is on the list. Forty centuries and his history teacher look down on him.

Figure 2: From Tuesday to Thursday, Jon leaves the hotel in the morning, visits several tourist venues in Cairo and gets back to the hotel in the evening.

Friday:

Jon arrives back home, connects to the wifi network and reviews his Sentiance timeline in Journeys. This time the driving scores for his latest car transport from the airport are readily available. Funnily enough, given his type of job, he is not surprised to see that Journeys has marked his week abroad as a leisure trip.

Figure 3: On Friday, Jon flies back home.

… three weeks later on a Brazilian beach:

After yet another long day of meetings and networking events, Jon sips a well-deserved cocktail and looks back at his Sentiance timeline. He is happy to see that both his current trip and his previous trip to Egypt are now correctly classified as business trips. Time changes everything.

Figure 4: Three weeks later, Jon starts another business trip, to Brazil.

The above example illustrates how computed results are subject to change, for several natural reasons:

- Due to restrictions in connectivity, the time to reach the Sentiance Platform may differ for different data types (e.g. sparse location fixes vs fine-grained sensor signals);

- Future detections can impact historical context (e.g. visited venues initially suggest the larger context of an ongoing leisure trip); and

- Optionally, manual user feedback might even be allowed to overrule decisions from the past.

In the remainder of this article, we will elaborate on the rationale behind changing results. First, let’s follow the data flow.

Remote observations

The Sentiance Platform interprets daily human activities in real time and recreates a rich timeline of contextual moments and relevant behavioral profiles. The first step in the processing pipeline is data collection on smartphones, or optionally other hardware devices. Digital observations are made with all available detection methods for which the user has given permission, such as the accelerometer, gyroscope, GPS, etc. The collected data is sent to the Sentiance Platform for a thorough analysis: the more measurements, the more accurately it can learn.

Looking inside the self-learning black-box

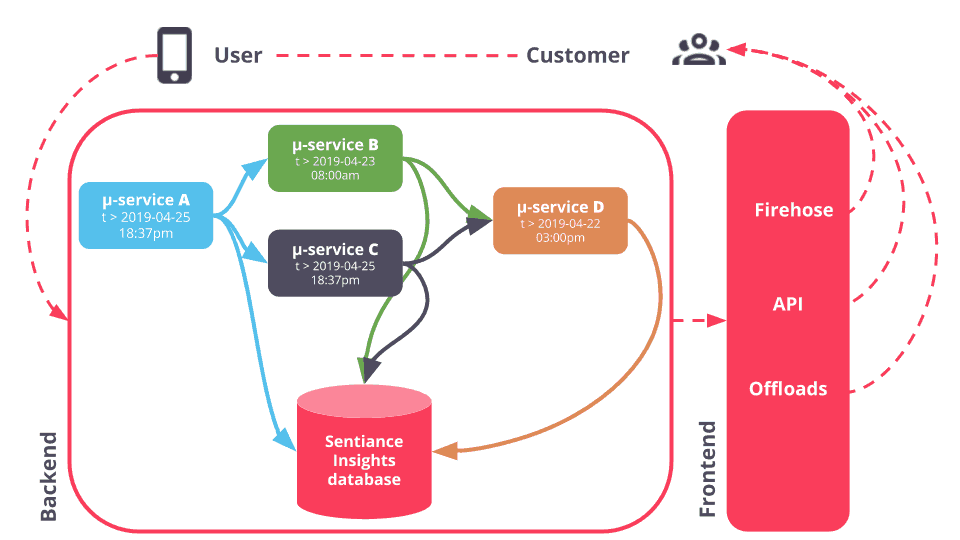

The backend of the Sentiance Platform is built around a streaming architecture. As soon as the collected data arrives, it immediately triggers a network of processing units, called microservices, which start evaluating the newly acquired information to update the user’s context, i.e. what he is doing, why he is doing it and what we predict will happen next. Each microservice is a containerized software component that is responsible for a piece of the Sentiance intelligence, e.g. deriving the transport mode for a transport from low-level sensor data, reconstructing a detailed trajectory out of sparse waypoints, detecting commutes or even predicting when the user will go to the gym, if needed.

When one microservice outputs new results, other microservices react to them if those results are relevant to their respective domains. It’s as simple as a classroom full of well-behaved children who are all eager to help the teacher with his chores.

Bringing insights back to the user

We currently offer three output channels to retrieve the user context and other insights computed from the raw digital observations: an API to query the most recent available results, a firehose for light-weight push notifications, and offloads for a (scheduled) snapshot of all results.

Our API makes interpreted results available through GraphQL, a query language for APIs. It is the main access point to our database of insights, which is continuously updated with the latest available results in all domains, as produced by the corresponding microservices in the backend.

The firehose mechanism notifies the customer via a webhook as soon as new or updated results are available. These light-weight messages are mainly meant to trigger further action on the customer’s side. If necessary, the customer can then fetch more details about the analysis through the API.

When customers are interested in a recurring extract of the latest available results, we provide them with offloads. An offload is a snapshot of results for a specific range in a user’s timeline. It is uploaded to a shared file storage system, much as if the customer had a scheduled API query running.

Figure 5: The Sentiance Platform backend is a streaming architecture with microservices as its main building blocks. Each microservice computes results within a specific domain, such as transport mode classification or driving scores. Three frontend interfaces are available for the customer to link results back to end users: API, firehose and offloads. Results from microservices can apply to different points in time in the user’s timeline.

Delayed results

We offer customers the three endpoints described above as different ways to extract insights from the Sentiance Platform. Depending on how they are used or integrated into another technology, results might seem out of sync. The API always returns the latest available updates. This implies that when a customer reacts to a notification from the firehose, the result may already have been updated by the time the API is queried for more details. Similarly, offloads are usually slightly outdated for recent events compared to the latest results from the API. As we will see later on, though, older insights stop changing once enough time has passed, and from then on the results from offloads and the API are consistent. But the business trip use case makes clear that there are more reasons for delayed results. When Jon checks his Sentiance timeline, his Journeys app always fetches the latest available results from the API, yet he still had to wait until the next day to see driving scores for the car transport he made on Monday.
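To make the out-of-sync effect concrete, here is a minimal Python sketch of the three channels on top of a toy in-memory store. The class `ResultStore`, its methods and the result key `trip:monday` are hypothetical illustrations, not the Sentiance API. The sketch only assumes that the API returns the latest version, that a firehose message refers to the version that triggered it, and that an offload is a frozen copy taken at a given moment.

```python
from dataclasses import dataclass, field


@dataclass
class ResultStore:
    """Toy stand-in for an insights database (hypothetical, not the Sentiance API)."""
    versions: dict = field(default_factory=dict)       # result key -> list of values
    notifications: list = field(default_factory=list)  # firehose-style messages

    def publish(self, key: str, value: str) -> None:
        # A microservice writes a new or updated result ...
        self.versions.setdefault(key, []).append(value)
        # ... and a light-weight notification refers to the version just written.
        self.notifications.append({"key": key, "version": len(self.versions[key])})

    def query_latest(self, key: str) -> str:
        # API-style query: always the latest available value.
        return self.versions[key][-1]

    def offload(self) -> dict:
        # Offload-style snapshot: latest values frozen at this moment.
        return {key: values[-1] for key, values in self.versions.items()}


store = ResultStore()
store.publish("trip:monday", "leisure trip")
snapshot = store.offload()                     # a scheduled offload runs now
store.publish("trip:monday", "business trip")  # a later update of the same result

first_notification = store.notifications[0]
print(first_notification["version"],
      store.query_latest("trip:monday"),
      snapshot["trip:monday"])  # 1 business trip leisure trip
```

A customer reacting to the first notification learns that version 1 exists, while the API already serves version 2 and the earlier offload still holds version 1. None of the channels is wrong; they simply observe the same evolving result at different moments.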

Inherently, all detections from a smartphone arrive on the Sentiance Platform with a delay. Several external causes explain the varying latency between the actual physical user event and the arrival of the payload carrying the digitized observation of that event. These range from hardware limitations on the smartphone to slow mobile network connectivity or even complete outages.

During Jon’s transport from home to the airport, our transport classification microservice already receives live location fixes, sent over mobile data. Based on speed and environmental context, it concludes that Jon is driving a car. However, the most detailed sensor detections, such as those from the gyroscope, might be too large for Jon’s mobile data plan, so they are buffered on the smartphone until they can be sent over wifi the next day, at 8:00 am in the hotel. So only on Tuesday morning does our microservice that translates sensor signals into driving scores update Jon’s timeline for Monday. When Jon arrives home on Friday, his phone immediately connects to his own wifi, and driving scores are returned within minutes of the car transport from the airport.

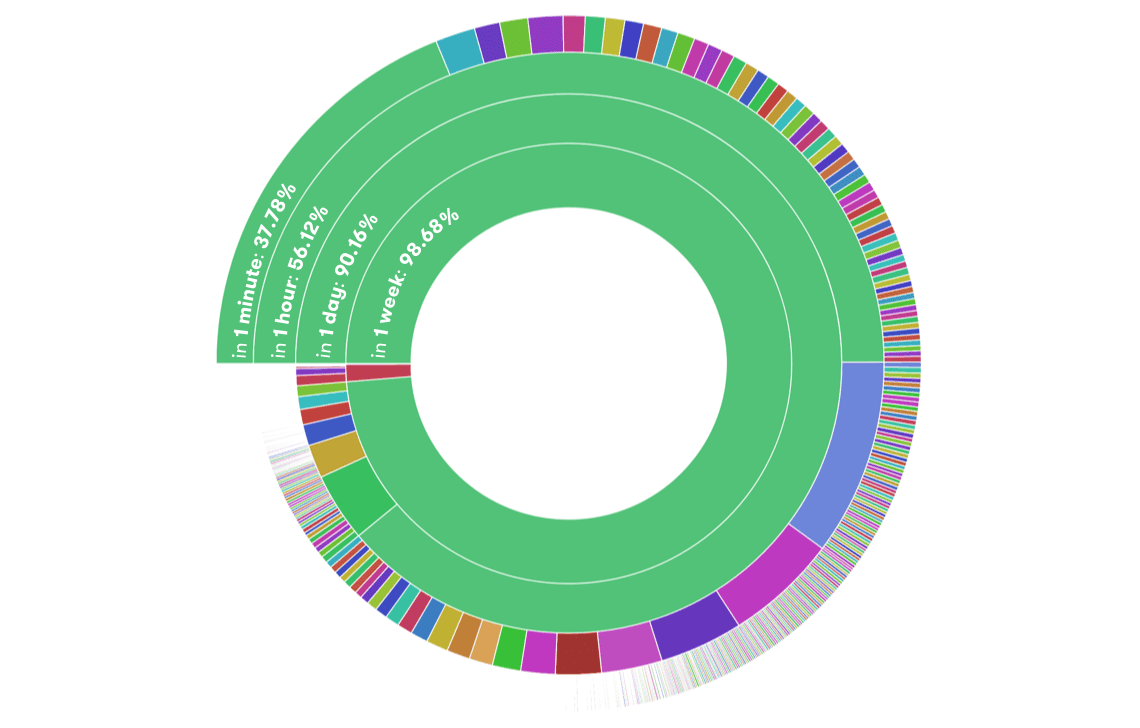

Figure 6: Reported latency between the end of a transport and the arrival on the Sentiance Platform of the sensor data required to compute driving scores. The count distribution is shown in one-hour intervals for all transports that took place in April 2019, as detected by the Sentiance Journeys app.

56.12% of the sensor data arrived within an hour (green segment), another 11.45% was received in the second hour (blue segment), 6.52% in the third hour (magenta segment), 4.83% in the fourth hour (purple segment), etc. By default, in the Journeys app, we wait for a wifi connection to send the sensor data, which explains the significant number of driving scores that come with a delay of more than an hour. For customers who need the driving scores faster, we can reduce these delays by sending this particular type of payload directly over mobile data.
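The per-hour shares quoted above can be accumulated to see how much of the sensor data had arrived after each hour. This short Python snippet only uses the four figures listed in the text; it does not reproduce the full distribution in Figure 6.

```python
from itertools import accumulate

# Share (%) of sensor data arriving in hour 1, 2, 3 and 4 after the end of a
# transport, as quoted in the text for April 2019.
hourly_share = [56.12, 11.45, 6.52, 4.83]

# Running total: share of sensor data that had arrived after 1, 2, 3 and 4 hours.
cumulative = [round(total, 2) for total in accumulate(hourly_share)]
print(cumulative)  # [56.12, 67.57, 74.09, 78.92]
```

So roughly 79% of the sensor data had arrived within four hours, while more than a fifth took longer, which is in line with the 90.16% within a day reported in Figure 7.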

Figure 7: Extended version of the report in Figure 6. From the outer to the inner circle, the count distribution is shown in intervals of a minute, an hour, a day and a week, respectively. The larger green segment on each circle represents the portion of sensor data that arrived within the first interval: 37.78% within a minute (outer circle), 56.12% within an hour (second circle), 90.16% within a day (third circle) and 98.68% within a week (inner circle). Each larger circle can be considered a more detailed view of the smaller circles inside it, zooming in on the distribution over smaller intervals.

In addition, newly arriving detections might influence results that have already been computed based on older detections. One practical example is the distance traveled for a transport that is still ongoing. As the user is still moving and more location fixes arrive on the Platform, the distance naturally grows. But of course, the more complicated insights can also be modified over time, like the purpose of a trip abroad, the detection of a lunch break moment or whether a user is a green commuter.
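As a simple illustration of a result that grows as new detections arrive, the sketch below re-evaluates the distance of an ongoing transport after each newly received location fix. It assumes a plain haversine great-circle distance between consecutive fixes and a mean Earth radius of 6,371 km; the function names and the coordinates are hypothetical, and a production system would likely also filter noisy fixes or reconstruct the trajectory first.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius (assumption for this sketch)


def haversine_m(p, q):
    """Great-circle distance in metres between two (lat, lon) fixes given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*p, *q))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def running_distances(fixes):
    """Distance of an ongoing transport as re-evaluated after each arriving fix."""
    total = 0.0
    history = [total]  # after the first fix, nothing has been travelled yet
    for prev, curr in zip(fixes, fixes[1:]):
        total += haversine_m(prev, curr)
        history.append(total)
    return history


# Hypothetical fixes, each roughly 1.1 km further north than the previous one.
fixes = [(51.00, 4.0), (51.01, 4.0), (51.02, 4.0)]
print([round(d) for d in running_distances(fixes)])
```

Every earlier value in the returned list was a correct answer given the data available at that moment; it simply got superseded once more fixes arrived.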

Trying to get a grip on the user’s reality, we constantly have to keep in mind that results are subject to change. But for how long can results still change? Eventually, when all the cards (read: data) are on the table (read: Platform), we should draw a line somewhere. In the next section, we will introduce a basic rule that is key in this discussion at Sentiance.

The consequences of time travel

What if you could travel back in time to change something in the past? When you returned to the present, your world might look different than before because of the changes you made. This would certainly have benefits, but Hollywood has given us plenty of movies warning about the devastating consequences too.

The Sentiance Platform is like a virtual universe of parallel timelines for hundreds of thousands of users. In this universe, our ultimate objective is an accurate reconstruction of a user’s real life, by continuously updating his or her virtual Sentiance timeline based on new input from digital detections. From this perspective, our microservices can be viewed as an army of time travelers that can make modifications in a user’s virtual past, from a split second ago to maybe days or even weeks back. So we must be well aware of the consequences of time travel.

Figure 8: Parallel timelines with the detected context changes in the use case scenario. Jon has been visiting the Great Pyramid, among other historical sites, so after a few days our Platform decides that he started a leisure trip on Monday. Given his job at a travel insurance group, it actually turns out to be a business trip, so Jon’s context for his virtual Monday gets altered again. Of course, Jon is a brand new user on our Platform who, moreover, made regular commutes during his first week and in the weeks after his Egypt trip. At the start, we were still learning his habits, so give us some credit for this. At least we quickly ruled out the possibility that Jon had moved to Cairo on Monday.

When we zoom out of the Sentiance Platform again and look at it from the outside as a black-box, we want it to meet an important high-level characteristic to cope with the concept of time:

It must be clear when results are final and not subject to change anymore.

One motivation for this follows immediately from several customers’ expectations. The customer’s business logic sometimes requires a steady state for the insights that Sentiance provides in an integrated technology. Improvements of initial results are usually allowed, but a customer ideally also gets a guarantee on when certain results are final, e.g. for internal reporting or billing purposes.

Another motivation for this characteristic is timely removal of user data. Although it might seem less important from a pure business perspective, proper data retention still has a significant impact on security, privacy, and pricing. Sentiance focuses on data mining in the first place and not data warehousing. User data should only be stored for as long as it needs to be available for querying or as long as it can still impact other results. So knowing which results won’t change anymore allows us to keep data storage to a minimum. For example, it might not make sense to keep all of a user’s historical car transports, when a customer is only interested in holiday destinations. Of course, technically it is perfectly possible to keep them, but it comes with significant trade-offs such as increased cost for storage and operations.

Here, too, the metaphor of the human brain holds. Our memory is not persistent for all the small things in life. On the one hand, we selectively remember detailed observations, and they can shape how we interpret new experiences, or draw conclusions, for years afterwards. On the other hand, many everyday events are considered irrelevant, yet they still add up to a certain general feeling or insight. Again, Jon might not recall all those history stories from high school exactly, and only a few of them may actually be relevant to him. But he definitely learned what drives passionate teachers to opt for his travel insurance when they want to explore the world.

Time travel rules for microservices

How does the abstract statement that it should be clear when results are final translate in practice to our microservices, the building blocks of the Sentiance Platform? They all contribute to the virtual timeline of a user in their own isolated domain. So in order to fulfill our high-level Platform characteristic, all microservices must follow the same implementation rule: explicitly expose how far back in the past they can still make modifications. With this simple rule in each microservice, we can achieve our main Platform objective. To clarify the idea, we first need to introduce some common terminology.

Every time we make a digital observation of a real-life event, such as a GPS location fix or an acceleration sensor signal, we add a timestamp of when the measurement was registered on the user’s smartphone. This actual physical time is what we call event time.

On the other hand, we use the term processing time for the timestamp at which a computed result is produced by a microservice. Unless the output is a prediction, the processing time will by definition always be later than the event time.

In our business trip example, for Jon’s car transport to the airport this means:

- We take the start of the transport as its event time, which is Monday 3:00 pm. (For the sake of the discussion, we refer to Jon’s local time; in practice, all timestamps are internally standardized to UTC.)

- A stream of GPS location fixes is sent to the Platform over Jon’s mobile data connection during his transport. The transport mode is continuously re-evaluated, so its processing time keeps increasing until a few minutes after Jon’s arrival at the airport. This doesn’t affect the event time, which stays at 3:00 pm.

- The processing time for the driving scores of that same car transport is on Tuesday, a few minutes after 8:00 am, when Jon has connected to wifi and the detailed sensor data has been analyzed for the first time. The same holds for the evening taxi ride from the airport.

- Now the processing time for the transport mode moves to Tuesday morning as well, because the transport mode is re-evaluated one last time based on the richer sensor data. In this case, though, the transport mode classification microservice sticks with its original decision that Jon was in a car on Monday.
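The distinction between event time and processing time can be captured in a small record type. This Python sketch assumes a hypothetical `TimelineResult` schema and uses an arbitrary Monday (1 April 2019) for the scenario; all processing timestamps are illustrative. It replays the transport mode updates from the list above: the event time stays fixed at the start of the car transport, while each new version gets a later processing time.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TimelineResult:
    """One version of a result emitted by a microservice (hypothetical schema)."""
    event_time: datetime       # when the underlying real-life event took place
    processing_time: datetime  # when the microservice produced this version
    value: str
    is_prediction: bool = False

    def __post_init__(self):
        # Unless it is a prediction, a result cannot be produced before its event.
        if not self.is_prediction and self.processing_time < self.event_time:
            raise ValueError("processing time precedes event time")


# Illustrative timestamps for the car transport to the airport (local time).
start = datetime(2019, 4, 1, 15, 0)  # Monday 3:00 pm: event time of the transport
transport_mode_versions = [
    TimelineResult(start, datetime(2019, 4, 1, 15, 10), "car"),  # live location fixes
    TimelineResult(start, datetime(2019, 4, 1, 16, 5), "car"),   # just after arrival
    TimelineResult(start, datetime(2019, 4, 2, 8, 3), "car"),    # Tuesday, sensor data on wifi
]

latest = transport_mode_versions[-1]
print(latest.value, latest.processing_time - latest.event_time)  # car 17:03:00
```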

Finally, we define the event horizon of a microservice as the lower bound on the event time of all future updates that will be produced by that microservice. The event horizon thus acts as a sliding marker on the user’s virtual timeline and guarantees that all results before that time are final and will not be updated anymore by the corresponding microservice. It is as if our time traveler is not allowed to go back in time beyond this point to make modifications. As time evolves, we demand that an event horizon can only increase, never decrease. This concept of event horizons, or a variant of it, is well known and commonly used in streaming architectures, where it is often referred to as watermarking.
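An event horizon can be sketched as a small monotonic marker, in the spirit of a streaming watermark. The class below is a hypothetical illustration, not Sentiance code: it rejects any attempt to move the horizon backwards, and it lets a microservice check that an update does not touch the finalized part of the timeline.

```python
from datetime import datetime


class EventHorizon:
    """Monotonic lower bound on the event time of all future updates (a watermark)."""

    def __init__(self, initial: datetime):
        self._value = initial

    @property
    def value(self) -> datetime:
        return self._value

    def advance(self, candidate: datetime) -> None:
        # The horizon may only move forward in event time, never backwards.
        if candidate < self._value:
            raise ValueError("an event horizon cannot decrease")
        self._value = candidate

    def is_final(self, event_time: datetime) -> bool:
        # Results strictly before the horizon will not be updated anymore.
        return event_time < self._value

    def check_update(self, event_time: datetime) -> None:
        # A microservice must not emit an update behind its own horizon.
        if event_time < self._value:
            raise ValueError("update would modify a finalized part of the timeline")


horizon = EventHorizon(datetime(2019, 4, 1, 15, 0))   # Monday 3:00 pm
horizon.advance(datetime(2019, 4, 2, 8, 0))           # Tuesday 8:00 am
print(horizon.is_final(datetime(2019, 4, 1, 15, 0)))  # True
```

Raising an error on a decreasing horizon is a design choice that makes violations of the rule visible; an alternative is to silently keep the maximum of the current and candidate values.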

But how can we guarantee such a lower bound? Note that we can view the Sentiance SDK on a user’s smartphone as the very first microservice in the pipeline, responsible for generating payloads of digital observations. It buffers these payloads on the device until they have been successfully transferred to the Sentiance Platform backend. This naturally yields an event horizon for the SDK: the event time of the oldest detection in the buffer that is still awaiting an acknowledgment of receipt is a lower bound on the event time of all future SDK payloads. In our example, the large sensor data keeps the SDK’s event horizon on Monday until the payload has been successfully sent to the Sentiance Platform, together with the sensor data for the taxi ride. Only then does the SDK’s event horizon move to Tuesday, to the time when Jon connects to wifi and all SDK buffers can safely be flushed.
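The SDK’s event horizon follows directly from its buffer. In this hypothetical Python sketch, detections are assumed to enter the buffer as soon as they are recorded, so an empty buffer means nothing older than the current time can still arrive. The class name, payload identifiers and timestamps are illustrative, loosely following Jon’s Monday and Tuesday.

```python
from datetime import datetime


class SdkBuffer:
    """Hypothetical on-device buffer of detections awaiting acknowledgment.

    Assumes detections are enqueued as soon as they are recorded, so the
    buffer always contains the oldest detection that has not reached the backend.
    """

    def __init__(self):
        self._pending = {}  # payload id -> event time of its oldest detection

    def enqueue(self, payload_id: str, event_time: datetime) -> None:
        self._pending[payload_id] = event_time

    def acknowledge(self, payload_id: str) -> None:
        # The backend confirmed receipt, so the payload can be flushed.
        self._pending.pop(payload_id, None)

    def event_horizon(self, now: datetime) -> datetime:
        # The oldest unacknowledged detection bounds all future payloads; with an
        # empty buffer, only detections from "now" onwards can still be sent.
        return min(self._pending.values(), default=now)


buffer = SdkBuffer()
buffer.enqueue("sensors-car-to-airport", datetime(2019, 4, 1, 15, 0))
buffer.enqueue("sensors-taxi-to-hotel", datetime(2019, 4, 1, 22, 30))
print(buffer.event_horizon(now=datetime(2019, 4, 1, 23, 0)))  # 2019-04-01 15:00:00

# Tuesday 8:00 am: wifi is available and both payloads are acknowledged.
for payload_id in ("sensors-car-to-airport", "sensors-taxi-to-hotel"):
    buffer.acknowledge(payload_id)
print(buffer.event_horizon(now=datetime(2019, 4, 2, 8, 0)))   # 2019-04-02 08:00:00
```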

Now we can apply the concept of an event horizon recursively to all downstream microservices in the pipeline. Each microservice in the Platform listens to one or more input streams that are already marked with a sliding event horizon. The microservice runs its business logic on the input data and produces updates on the virtual timeline within its own domain. It is the combination of this business logic and the upstream event horizon(s) that determines a lower bound on the microservice’s own output stream of timeline changes; see also Figure 5 for an illustration. Some microservices need to keep their event horizon at an older timestamp than that of the upstream microservices they consume from, because their logic has an impact further in the past. In addition, such microservices might need to keep a time window of their input data in memory. In that case, a natural retention rule for input data with an old event time can be derived explicitly from their own event horizon and the upstream event horizon(s).
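The recursive rule and the derived retention rule can be sketched under one simplifying assumption: a result at event time t depends only on inputs with event times in [t - context, t + lookback], for two fixed, hypothetical durations `lookback` and `context`. An input at event time s can then rewrite results back to s - lookback, which gives the downstream horizon; results at or after that horizon never need inputs older than horizon - context, which gives the retention cutoff. Real microservices may have far less regular dependencies, and all names and timestamps below are illustrative.

```python
from datetime import datetime, timedelta


def downstream_horizon(upstream_horizons, lookback: timedelta) -> datetime:
    """Event horizon of a microservice fed by the given upstream horizons.

    Assumes a new input at event time s can rewrite results back to s - lookback.
    """
    # No future input can be older than the slowest upstream horizon ...
    earliest_future_input = min(upstream_horizons)
    # ... and each such input may ripple back by at most `lookback`.
    return earliest_future_input - lookback


def retain(window, own_horizon: datetime, context: timedelta):
    """Keep only buffered inputs that can still influence a non-final result.

    Assumes a result at event time t needs inputs from no earlier than t - context.
    """
    cutoff = own_horizon - context
    return [(event_time, item) for event_time, item in window if event_time >= cutoff]


# Hypothetical upstream horizons (e.g. transport modes and visited venues).
upstream = [datetime(2019, 4, 2, 8, 0), datetime(2019, 4, 2, 9, 0)]
horizon = downstream_horizon(upstream, lookback=timedelta(days=1))
print(horizon)  # 2019-04-01 08:00:00

window = [
    (datetime(2019, 3, 29, 18, 0), "home"),
    (datetime(2019, 3, 31, 12, 0), "gym"),
    (datetime(2019, 4, 1, 15, 0), "car"),
]
kept = retain(window, horizon, context=timedelta(days=2))
print([item for _, item in kept])  # ['gym', 'car']
```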

In our example use case, the microservice that classifies Jon’s journey as a leisure trip searches for certain patterns in his timeline. Therefore, it keeps a local state of visited locations and transport modes of the past few weeks. Initially, the microservice indicates Monday 3:00 pm, when Jon leaves home, as its event horizon, because he definitely was not on a journey before that point in time. As soon as Jon has visited some historical sites abroad a few days later, the microservice detects a leisure trip, but it does not move its event horizon yet, because it might still revise that decision. Meanwhile, the event horizon of the upstream transport mode classification microservice has already moved from Monday 3:00 pm to at least Tuesday 8:00 am, because all sensor data for Jon’s car transports before that time has been received and processed, as guaranteed by the SDK’s event horizon.
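The trip classifier’s behaviour can be expressed as a small hypothetical rule on top of the upstream horizon: as long as a detected trip can still be reclassified, the horizon is held at that trip’s start. The function name and the notion of an `open_trip_start` are assumptions made for this Python sketch, with illustrative timestamps.

```python
from datetime import datetime
from typing import Optional


def trip_classifier_horizon(upstream_horizon: datetime,
                            open_trip_start: Optional[datetime]) -> datetime:
    """Hypothetical horizon rule for a trip-classifying microservice.

    While a detected trip may still be reclassified, its start stays open for
    modification, so the horizon cannot pass it, even if upstream has moved on.
    """
    if open_trip_start is None:
        return upstream_horizon
    return min(upstream_horizon, open_trip_start)


monday_departure = datetime(2019, 4, 1, 15, 0)  # Jon leaves home
upstream = datetime(2019, 4, 2, 8, 0)           # transport modes final up to Tuesday 8:00 am
print(trip_classifier_horizon(upstream, monday_departure))  # 2019-04-01 15:00:00
print(trip_classifier_horizon(upstream, None))              # 2019-04-02 08:00:00
```

Under this rule, the horizon only catches up with the upstream horizon once the trip decision is settled, which in Jon’s story could take several weeks.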

The concept of low-level event horizons allows us to clearly indicate whether a result from a microservice is final or still subject to change. And thus, by extension, since the entire Sentiance Platform is built as a network of connected microservices, it automatically meets the required characteristic.
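Because every horizon is derived from its upstream horizons, the horizons of a whole pipeline can be computed in topological order, starting from the SDK. This Python sketch uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+); the service names, the graph and the fixed per-service lookback are hypothetical simplifications. The minimum over all horizons then marks the point before which every result in the pipeline is final.

```python
from datetime import datetime, timedelta
from graphlib import TopologicalSorter


def propagate_horizons(upstreams, lookbacks, sdk_horizon):
    """Derive every microservice's event horizon from the SDK's horizon.

    upstreams: service -> services it consumes from ("sdk" is the source)
    lookbacks: service -> how far back its logic may rewrite results (assumed fixed)
    """
    horizons = {"sdk": sdk_horizon}
    # static_order() yields each service only after all of its upstream services.
    for service in TopologicalSorter(upstreams).static_order():
        if service == "sdk":
            continue
        earliest_input = min(horizons[u] for u in upstreams[service])
        horizons[service] = earliest_input - lookbacks.get(service, timedelta(0))
    return horizons


# Hypothetical pipeline loosely inspired by the example in this article.
upstreams = {
    "transport_mode": ["sdk"],
    "driving_scores": ["sdk"],
    "venues": ["sdk"],
    "trip_classifier": ["transport_mode", "venues"],
}
lookbacks = {"trip_classifier": timedelta(days=3)}
horizons = propagate_horizons(upstreams, lookbacks, datetime(2019, 4, 2, 8, 0))

# Everything before the smallest horizon is final across the whole pipeline.
platform_horizon = min(horizons.values())
print(platform_horizon)  # 2019-03-30 08:00:00
```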

Conclusions

At Sentiance we use digital observations on people’s smartphones to derive a rich contextualized timeline of events and moments. The accuracy of the computed insights is time-dependent. At a given point in time, we might not have received all relevant detections because of restrictions in connectivity, or future events might still influence the results. We strive to keep these delays to a minimum with a streaming architecture that is designed to react immediately to any new piece of information as soon as it arrives on the Platform.

By recursively keeping track of lower bounds in time, i.e. event horizons, for result updates we achieve a valuable characteristic on the Platform: it is clear when results are not subject to change anymore. This opens the door to a transparent integration with the customer’s business logic or technology, and allows for tailored data storage optimization.

In essence, you could compare this mechanism to the way Jon, from the use case scenario, willingly forgets the details of those endless history stories, because he has drawn his own conclusions and considers them quite useless in life after high school. Likewise, you probably won’t remember each and every word of this article, but it may still turn out to have a massive influence on the way you read all future Sentiance articles. At least you will hopefully understand why our Journeys app initially reported Jon’s first business trip as a holiday, only to swiftly readjust it as soon as we had gained deeper insight into his routines. If Jon had installed Journeys one month earlier, the reporting would have been correct right away.

As always, the longer the Sentiance Platform can evaluate the data, the better it gets to know you: granting you crucial new insight, with the added benefit of hindsight.

On privacy and Ethical AI

There is an opportunity for businesses to use data and AI to build experiences, products, and services that genuinely help people live safer, healthier and more convenient lives. At the same time, it is more important than ever to address the privacy risks associated with profiling and automated decision-making. The EU General Data Protection Regulation, better known as GDPR, helps to deliver on this by requiring businesses to apply basic ethical principles to the way they collect, manage and store personal data, and by giving consumers more control over their personal data. Sentiance embraces GDPR and participates in ethical AI activities such as the European Commission initiative to pilot the assessment list of the Ethics Guidelines for Trustworthy AI.