Introduction

The car insurance industry is rapidly transitioning from traditional fixed-fee insurance packages to usage-based insurance (UBI). With recent car insurance cost surges, insurance companies are starting to realize that the majority of well-behaved customers shouldn't have to pay for the excesses of the few.

Traditional UBI approaches such as Pay As You Drive (PAYD) are gaining popularity, as customers only pay for the miles they drive. However, even within this customer segment, pricing is based on general statistics that might not apply to the majority of drivers. For example, men pay on average $15,000 more for auto insurance during their lifetime than women, and young drivers worldwide struggle to find affordable insurance.

More recently, a second type of UBI has entered the market in the form of Pay How You Drive (PHYD) packages. Insurance companies provide black box devices that are mounted inside the car and use a set of high-end sensors to monitor your driving behavior. Based on your driving aggressiveness, traffic insight and anticipation, and speeding behavior, prices can be adapted to the individual. As a result, the majority of customers will see their fees go down, whereas the few aggressive drivers will notice an increase.

Despite the obvious advantages of PHYD, such black boxes come with several disadvantages too: high cost of installation and maintenance, no distinction between drivers (cars are often shared between family members), no opportunity for personalized driver coaching, and no way of augmenting the data with important context (e.g. did the driver just spend a few hours in a bar? Was the driver using a phone while driving?).

At Sentiance, we developed a sophisticated platform that allows us to leverage smartphone sensors to overcome these disadvantages. Smartphones contain the exact same sensors as costly black boxes, namely accelerometer, gyroscope, magnetometer and GPS sensors. These sensors are used by the phone to automatically flip the screen when the phone's orientation changes, to augment mapping and routing applications, and to improve gaming experience.

Exploiting these sensors to model driving behavior requires sophisticated algorithmic processing and machine learning techniques to cope with phone orientation changes, differing phone sensor characteristics, road types, and more. In this article, we describe how we overcame some of these challenges, and we present the results of comparing our solution with an industrial-grade black box that is used in the Formula-1 industry.

Phone handling

The National Highway Traffic Safety Administration (NHTSA) estimated in 2013 that driver distraction explained about 18% of all accidents causing injury, and about 10% of all fatal vehicle crashes, costing employers more than $24,500 per crash, $150,000 per injury, and $3.6 million per fatality.

Smartphone apps that detect phone handling generally monitor app usage and screen-on time. However, such an approach does not distinguish between real phone handling while driving and the use of foreground apps (e.g. Google Maps) without manual intervention while the phone is mounted.

Instead of monitoring app usage, we directly use the phone's sensor data (accelerometer and gyroscope) to model how the phone's movement during phone handling differs from how it moves normally while driving. When the phone is not being handled, it could be in any orientation: mounted to the windshield, sliding around on the passenger seat, or tucked away in a pocket or handbag.

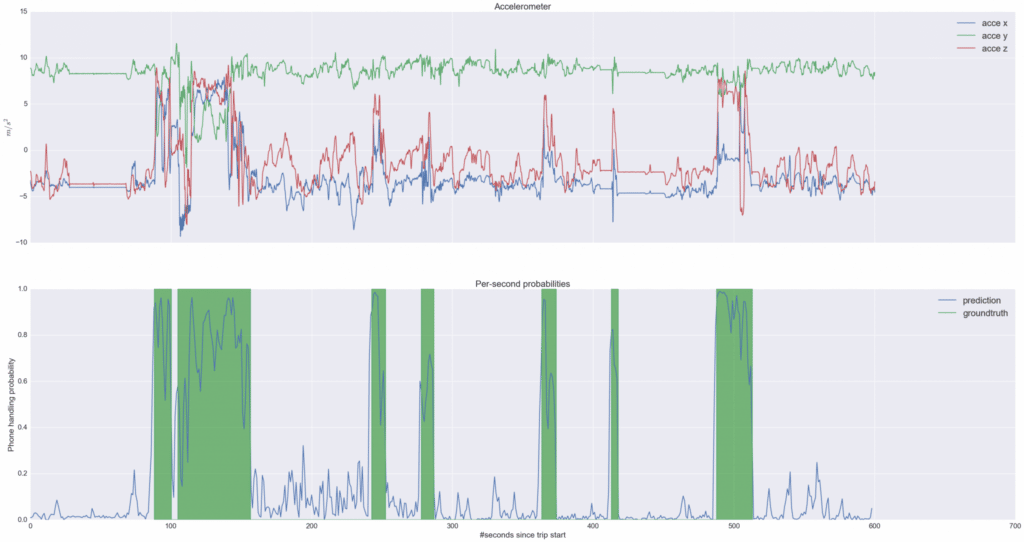

Our sensor analytics pipeline automatically learns to distinguish between real phone handling and other types of phone movements. The following figure shows the accelerometer data from a ten minute car trip, together with the phone handling probabilities produced by our classifier:

Raw accelerometer data (top) and phone handling probabilities (bottom) for a ten minute drive. Green blocks represent the manually annotated ground truth.

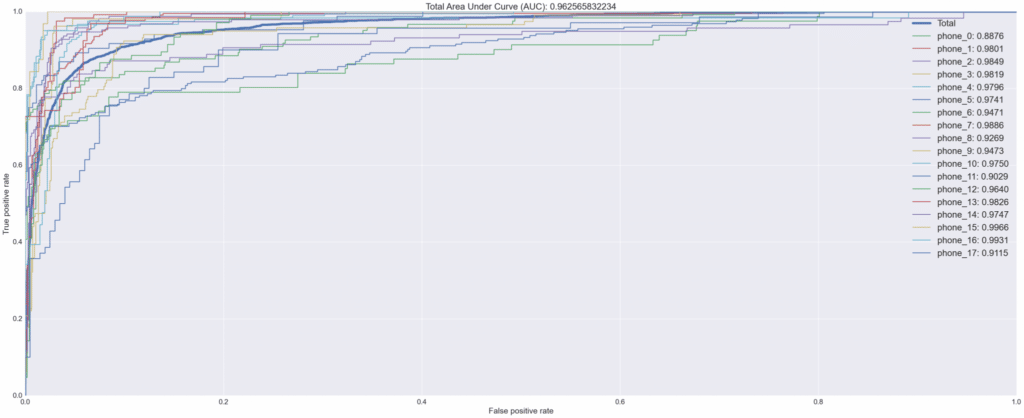

To quantify the performance of our algorithm, we manually annotated hundreds of real-life car trips to train the classifier, and used a small subset to evaluate its performance. The test set only contains trips that were not part of the training set. Also, no other trips from the same users were part of the training set, in order to prevent overfitting on specific user behavior. In line with academic literature, we present the accuracy of our method by reporting the area under the ROC curve:

Area under the ROC curve for the phone handling classification results on our test set. The 'Total' AUC is obtained by concatenating all trips and treating them as a whole. In total, we used more than 30 different types of smart phones, chosen by their global popularity.

The total AUC score of 96% was obtained without any further smoothing. Moreover, our algorithm is able to use both accelerometer and gyroscope if available, but also works fine if no gyroscope data can be sampled (e.g. low-end smart phones). To avoid overfitting on a specific sensor type or phone brand, we used more than 30 different types of phones during both training and evaluation. Finally, state-of-the-art signal processing and data augmentation techniques are used to ensure that the algorithm does not depend on a specific orientation of the smartphone.
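To make the metric concrete, the following is a minimal sketch of how an area under the ROC curve can be computed from classifier probabilities and manual annotations, using the rank-sum (Mann-Whitney U) formulation. The class and method names are hypothetical and this is not our production evaluation code; `totalAuc` illustrates the 'Total' score by concatenating all trips before scoring.

```java
import java.util.Arrays;
import java.util.Comparator;

/** Illustrative ROC AUC using the rank-sum (Mann-Whitney U) formulation. */
final class RocAuc {

    /**
     * @param scores classifier probabilities, one per sample
     * @param labels manual annotations (true = positive class)
     * @return area under the ROC curve, or NaN if only one class is present
     */
    static double auc(double[] scores, boolean[] labels) {
        int n = scores.length;
        Integer[] order = new Integer[n];
        for (int k = 0; k < n; k++) {
            order[k] = k;
        }
        // Sort sample indices by ascending score.
        Arrays.sort(order, Comparator.comparingDouble(idx -> scores[idx]));

        double positiveRankSum = 0.0;
        long positives = 0;
        int i = 0;
        while (i < n) {
            // Samples with tied scores all receive the average rank of the run.
            int j = i;
            while (j + 1 < n && scores[order[j + 1]] == scores[order[i]]) {
                j++;
            }
            double averageRank = (i + j) / 2.0 + 1.0; // ranks are 1-based
            for (int k = i; k <= j; k++) {
                if (labels[order[k]]) {
                    positiveRankSum += averageRank;
                    positives++;
                }
            }
            i = j + 1;
        }
        long negatives = n - positives;
        if (positives == 0 || negatives == 0) {
            return Double.NaN;
        }
        double u = positiveRankSum - positives * (positives + 1) / 2.0;
        return u / ((double) positives * negatives);
    }

    /** Concatenates per-trip arrays and scores them as a whole ('Total' AUC). */
    static double totalAuc(double[][] tripScores, boolean[][] tripLabels) {
        int total = 0;
        for (double[] trip : tripScores) {
            total += trip.length;
        }
        double[] scores = new double[total];
        boolean[] labels = new boolean[total];
        int offset = 0;
        for (int t = 0; t < tripScores.length; t++) {
            System.arraycopy(tripScores[t], 0, scores, offset, tripScores[t].length);
            System.arraycopy(tripLabels[t], 0, labels, offset, tripLabels[t].length);
            offset += tripScores[t].length;
        }
        return auc(scores, labels);
    }
}
```

An AUC of 1.0 means every handled sample received a higher probability than every non-handled sample, whereas 0.5 corresponds to random guessing.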

Mounted/loose detection

Although knowing when a phone is being handled during a car trip is valuable information for insurance companies, many of our customers reside in the fleet and mobility sector. Fleet management companies or ride sharing platforms usually ask their drivers to keep the phone mounted at all times.

An almost infinite number of mount types are on the market today, ranging from windshield mounts with a long, flexible handle to small clips that fit into the car's air vents. Each of these mounts allows the phone to be positioned in a different orientation and causes different kinds of vibrations and noise characteristics to be observed by the smartphone sensors.

Detecting whether or not the phone is mounted is not an easy task, given the sheer variety of phone mounts. Mounts with long and flexible handles cause the phone to vibrate while driving, polluting the accelerometer and gyroscope measurements. Moreover, the length and stiffness of the handle determine the magnitude and period of these vibrations, where long handles act as a low-pass filter on the data. Magnetic mounts cause serious interference on the smartphone's magnetometer, and air vent mounts often allow a large in-plane rotation of the phone. Finally, depending on the height of the driver and where the mount is positioned (e.g. corner of the windshield versus attached to a cup holder), the phone's orientation varies greatly.

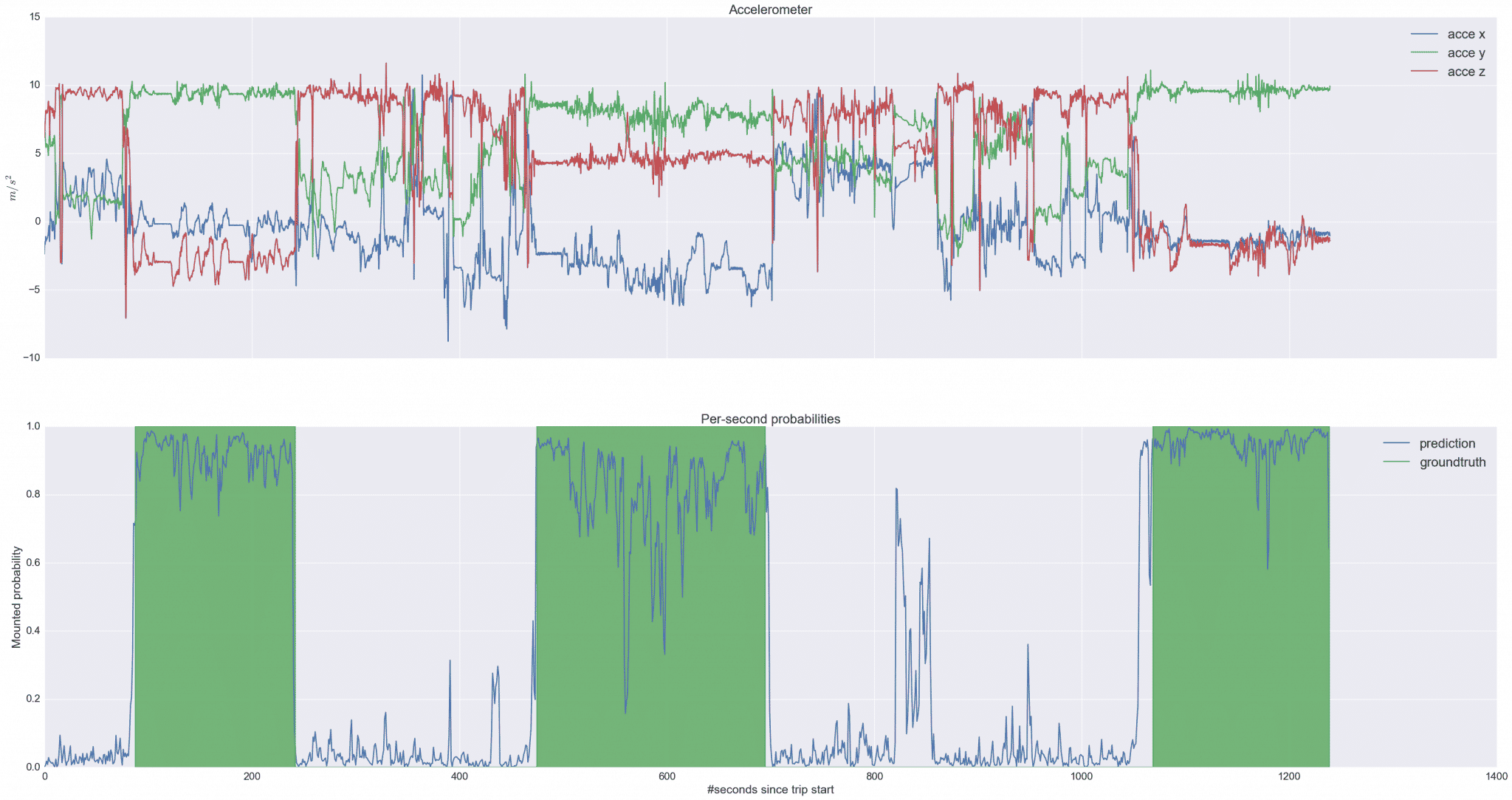

We re-trained and fine-tuned our deep machine learning pipeline discussed above, for the specific problem of mounted/loose detection. The following figure shows the sensor data and resulting classifier probabilities for a twenty minute trip:

Raw accelerometer data (top) and probabilities that the phone was mounted (bottom) for a ten minute drive. Green blocks represent the manually annotated ground truth.

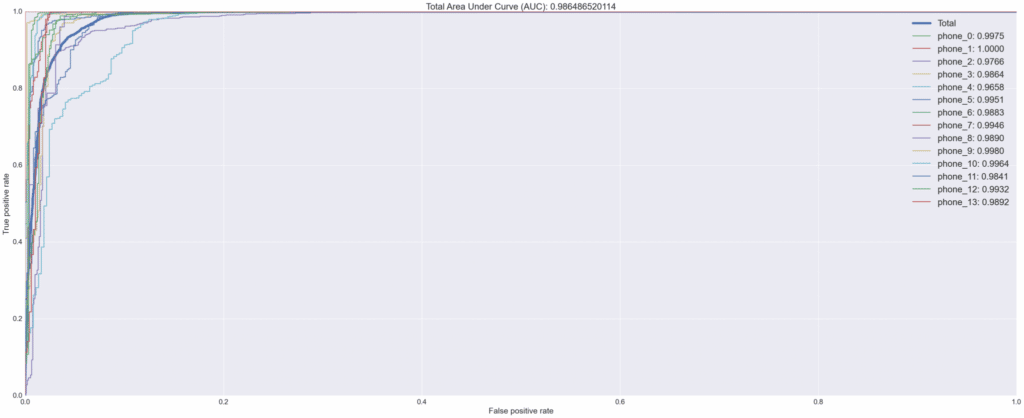

Similar to the phone handling classification problem, we gathered and manually labelled hundreds of trips with different phones, cars and drivers, testing different road types in several countries. We also tested about 30 different mount types and many more mount positions and phone orientations, in order to make sure that our method would not overfit on a single type of mount. The following figure shows the ROC AUC score achieved by our algorithm:

Area under the ROC curve for the mounted/loose classification results on our test set. The ‘Total’ AUC is obtained by concatenating all trips and treating them as a whole. In total, we used more than 30 different types of smart phones (represented equally in our dataset), as many different types of mounts, and many different mount positions and phone orientations.

The total AUC score of almost 99% allows us to combine this classifier with the phone handling classifier in order to further reduce the false positive rate. Combining both algorithms results in an accurate view of how users treat their phones while driving, an insight that cannot be obtained by simply monitoring app usage or screen locks.
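One simple way to combine the two classifiers is sketched below, purely as an illustration. It assumes that a time window only counts as phone handling when the handling probability is high and the mounted probability is low. This rule, the class name and both thresholds are assumptions made for the example, not a description of our actual fusion logic.

```java
/** Illustrative fusion of per-window outputs from the two classifiers. */
final class HandlingFusion {

    /**
     * @param pHandling         phone handling probability per time window
     * @param pMounted          mounted probability per time window
     * @param handlingThreshold hypothetical tuning parameter, e.g. 0.5
     * @param mountedThreshold  hypothetical tuning parameter, e.g. 0.5
     * @return true for windows flagged as handling while the phone is loose
     */
    static boolean[] fuse(double[] pHandling, double[] pMounted,
                          double handlingThreshold, double mountedThreshold) {
        if (pHandling.length != pMounted.length) {
            throw new IllegalArgumentException("probability arrays must have equal length");
        }
        boolean[] handled = new boolean[pHandling.length];
        for (int i = 0; i < handled.length; i++) {
            // A confident 'mounted' prediction vetoes a handling detection.
            handled[i] = pHandling[i] >= handlingThreshold && pMounted[i] < mountedThreshold;
        }
        return handled;
    }
}
```

Under this rule, a spurious handling detection caused by mount vibrations is suppressed as long as the mounted classifier is confident for the same window.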

Driving event detection

To get a precise insight into the driving behavior of the users, we developed a machine learning pipeline that allows the smartphone to be used directly as a replacement for expensive black box Inertial Measurement Units (IMUs). As opposed to many recent smartphone-based driving behavior approaches, we are able to accurately distinguish longitudinal forces (i.e. acceleration and braking) from latitudinal forces (i.e. centripetal force during turns) and vertical forces imposed by road bumps.

Moreover, we can cope with arbitrary phone orientation changes and phone handling, and do not depend on high frequency GPS signals, which are often unreliable and battery draining. Instead, our methods are based solely on smart phone sensor data. In the following paragraphs, we dive deeper into some of the difficulties that are encountered when coping with smart phone sensor data.

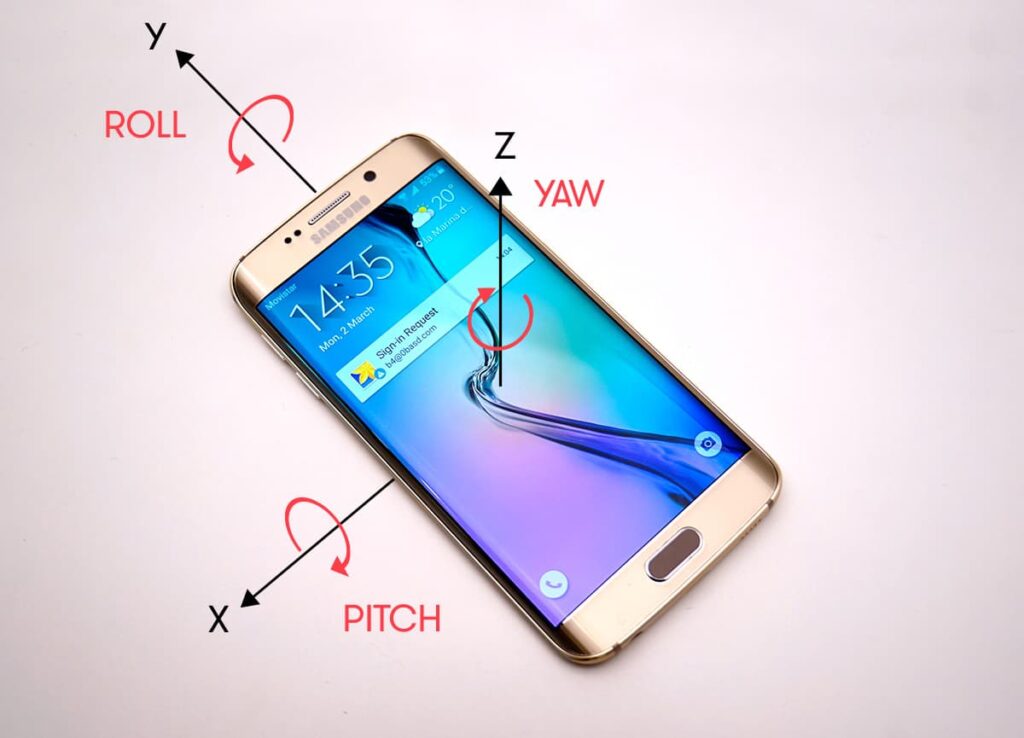

A first major difficulty is the dynamic estimation of the phone's orientation. A phone can be positioned in any arbitrary orientation, and the orientation might change at any time during a trip. For simplicity, we will express the phone's orientation using its yaw, pitch and roll angles in this article:

The phone's orientation can be expressed using Euler angle notation and is defined by its Yaw, Pitch and Roll.

Note, however, that in practice a more complicated representation is used, because Euler angles are known to suffer from the so-called gimbal lock problem. Diving into the specifics of rotation matrices, axis-angle representations and quaternions would take us too far afield.

The first step in our pipeline essentially tries to estimate the phone's roll and pitch angles, relative to the earth's surface. Once these are known, we then estimate the yaw angle, relative to the driving direction. This last part is important, since the absolute yaw angle changes every time a driver takes a turn, whereas the relative yaw angle uses the car itself as a reference coordinate system. Both steps are performed in real-time, are continuously re-estimated, and are achieved without leveraging the GPS sensor.

To obtain an estimate of the roll and pitch angles, gyroscope and accelerometer readings are combined by our sensor fusion solution. Whereas gyroscopes are known to exhibit long-term drift, accelerometers are known to exhibit a large amount of short-term noise. Dynamically fusing both sensor readings allows us to combine the best of both worlds. Moreover, accelerometers also measure the influence of gravity, even when there is no acceleration at all. We use this information to further improve the orientation estimate by assigning a higher belief to the gyroscope readings during hard acceleration and braking, while assigning a larger belief to the accelerometer readings in other cases. These beliefs are updated continuously by a probabilistic dynamical system.
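The idea of shifting belief between the two sensors can be illustrated with a much simpler technique: a complementary filter whose accelerometer weight shrinks when the measured magnitude deviates from 1 g. The sketch below is a stand-in for illustration only and is not the probabilistic dynamical system we use. The class name, the two tuning parameters and the axis convention are assumptions. The convention is: at rest, the accelerometer reports a vector of magnitude g pointing up in device coordinates, roll is a rotation about the device x axis, and pitch is a rotation about the device y axis.

```java
/** Illustrative complementary filter for roll and pitch with an adaptive accelerometer weight. */
final class TiltFilter {
    static final double G = 9.81;          // standard gravity, m/s^2

    private final double baseAccelWeight;  // hypothetical tuning parameter, e.g. 0.02
    private final double tolerance;        // relative deviation from 1 g at which the accelerometer is ignored

    double roll;   // radians
    double pitch;  // radians

    TiltFilter(double baseAccelWeight, double tolerance) {
        if (tolerance <= 0.0) {
            throw new IllegalArgumentException("tolerance must be positive");
        }
        this.baseAccelWeight = baseAccelWeight;
        this.tolerance = tolerance;
    }

    /**
     * @param gyro  angular rate (x, y, z) in rad/s, device frame
     * @param accel accelerometer reading (x, y, z) in m/s^2, device frame
     * @param dt    seconds since the previous sample
     */
    void update(double[] gyro, double[] accel, double dt) {
        // 1. Propagate the angles with the gyroscope (Euler-angle kinematics,
        //    which become singular at pitch = +/-90 degrees).
        double rollRate = gyro[0]
                + (gyro[1] * Math.sin(roll) + gyro[2] * Math.cos(roll)) * Math.tan(pitch);
        double pitchRate = gyro[1] * Math.cos(roll) - gyro[2] * Math.sin(roll);
        double gyroRoll = roll + rollRate * dt;
        double gyroPitch = pitch + pitchRate * dt;

        // 2. Tilt implied by the accelerometer; only meaningful when it measures mostly gravity.
        double accelRoll = Math.atan2(accel[1], accel[2]);
        double accelPitch = Math.atan2(-accel[0], Math.hypot(accel[1], accel[2]));

        // 3. Trust the accelerometer less the further its magnitude is from 1 g.
        double norm = Math.sqrt(accel[0] * accel[0] + accel[1] * accel[1] + accel[2] * accel[2]);
        double deviation = Math.abs(norm - G) / G;
        double w = baseAccelWeight * Math.max(0.0, 1.0 - deviation / tolerance);

        // 4. Blend, taking the shortest angular path to avoid wrap-around jumps.
        roll = gyroRoll + w * wrap(accelRoll - gyroRoll);
        pitch = gyroPitch + w * wrap(accelPitch - gyroPitch);
    }

    /** Wraps an angle to the interval [-pi, pi]. */
    static double wrap(double angle) {
        return Math.atan2(Math.sin(angle), Math.cos(angle));
    }
}
```

While the car is cruising, the accelerometer slowly corrects the gyroscope drift. During hard braking the measured magnitude moves away from 1 g, the weight drops towards zero, and the estimate relies on the gyroscope alone. The singularity in step 1 is the gimbal lock problem mentioned earlier.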

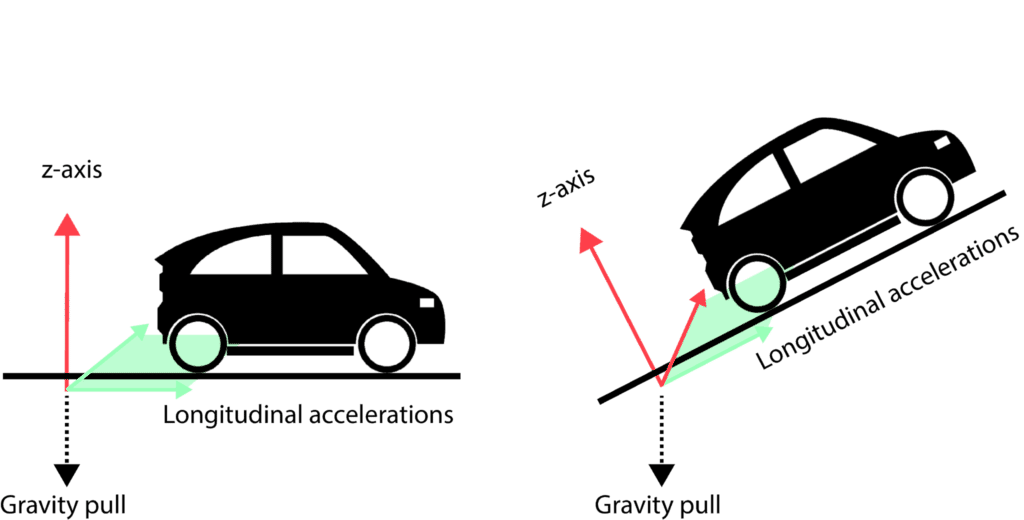

This method also allows us to cope with hills, which often induce incorrect acceleration readings in black box IMU approaches. The reason for this is that the gravity pull changes axes when driving uphill, as illustrated by the following figure:

When driving on hills, the gravity pull affects multiple axes at once, causing many black box IMU devices to record incorrect accelerations.

Dynamically estimating the phone's orientation allows us to remove the gravity pull from all axes, resulting in true linear acceleration estimates, independently of the phone's motion and orientation.
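As a minimal sketch of this gravity removal step, assume the roll and pitch angles are already known and that, at rest, the accelerometer reports a vector of magnitude g pointing up in device coordinates (roll about the device x axis, pitch about the device y axis). The class and method names are hypothetical.

```java
/** Illustrative gravity compensation given known roll and pitch angles. */
final class GravityRemoval {
    static final double G = 9.81; // standard gravity, m/s^2

    /** Gravity contribution to the accelerometer reading, in device coordinates. */
    static double[] gravityInDeviceFrame(double roll, double pitch) {
        return new double[] {
            -G * Math.sin(pitch),
            G * Math.sin(roll) * Math.cos(pitch),
            G * Math.cos(roll) * Math.cos(pitch)
        };
    }

    /** Subtracts the gravity contribution from a raw accelerometer sample. */
    static double[] linearAcceleration(double[] accel, double roll, double pitch) {
        double[] gravity = gravityInDeviceFrame(roll, pitch);
        return new double[] {
            accel[0] - gravity[0],
            accel[1] - gravity[1],
            accel[2] - gravity[2]
        };
    }
}
```

A phone that is pitched by a slope reads a non-zero value on its forward axis even when the car is standing still. Subtracting the projected gravity vector brings that reading back to zero, which is why an accurate orientation estimate matters on hills.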

Note: Both Android and iOS provide linear acceleration estimation APIs, often used by app and game developers. However, these estimators are coarse and unreliable for the use case of driving behavior. The algorithms used internally by Android and iOS are simplistic complementary filters, designed to be executed 'on device' with low latency, and optimized for gaming use cases (i.e. assumptions are made about the signal characteristics that are largely violated when recording in-car data). Our solution overcomes these shortcomings, enabling accurate driving behavior monitoring.

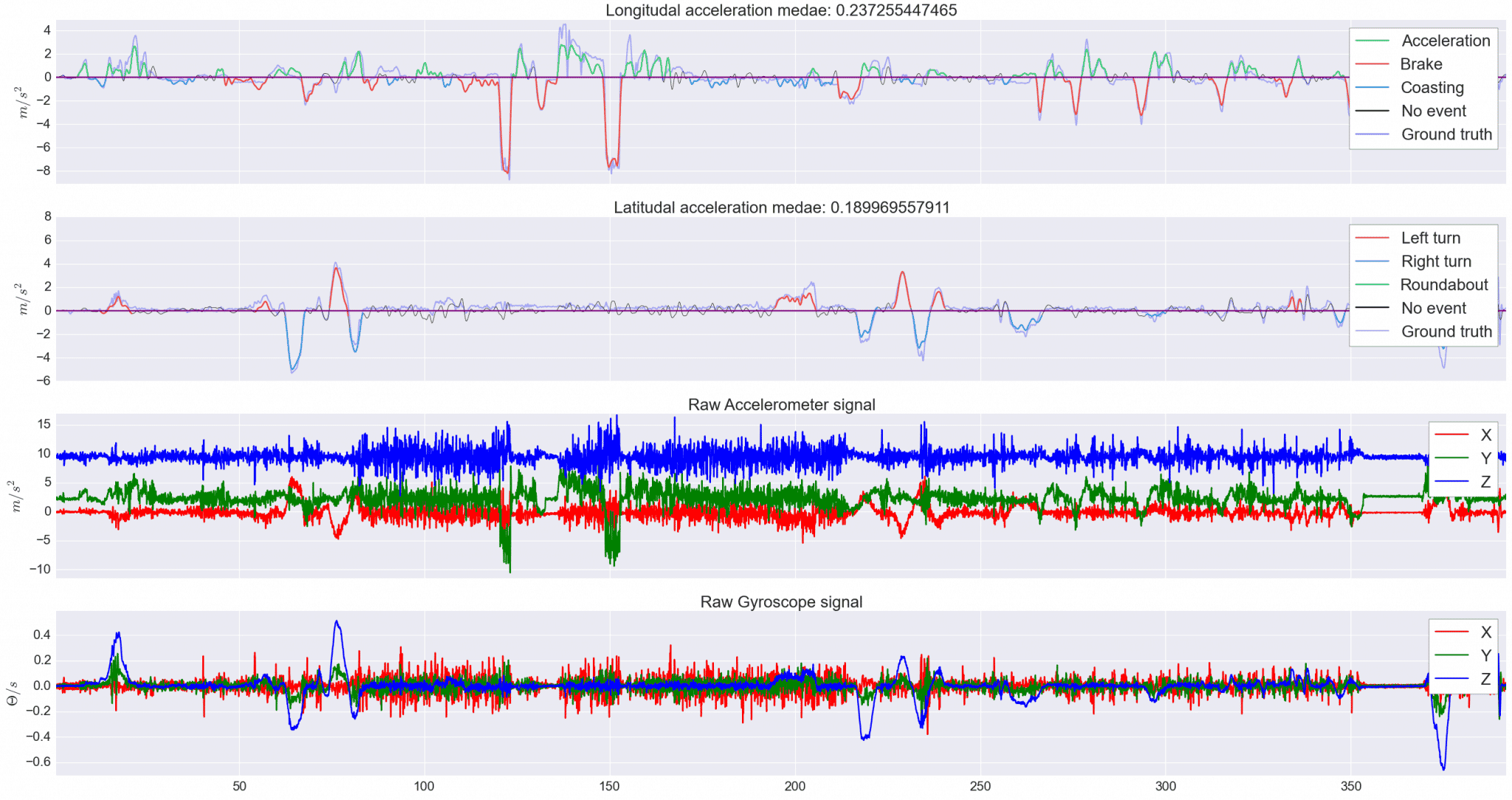

To evaluate our method, we compare the longitudinal and latitudinal orientation estimates with an industrial IMU, used by Formula-1 racers. The following figure shows the estimated latitudinal and longitudinal forces after phone orientation normalization, together with the IMU black box ground truth. Accuracies are reported using Median Absolute Error:

The purple curve corresponds to the IMU ground truth. The IMU was mounted to a fixed position, aligned with the car's driving direction. Accuracies are reported using Median Absolute Error.

This figure illustrates that our method performs on par with an expensive black box solution, and possibly even outperforms it. The latitudinal acceleration from second 100 until second 200 is not measured correctly by the ground truth IMU black box, which clearly exhibits a small bias, whereas our smart phone based method does not. Moreover, the MAE of approximately 0.2 is much lower than the inherent noise level of typical accelerometer sensors.
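For reference, the Median Absolute Error between a smartphone estimate and the IMU ground truth can be computed as in the sketch below. It assumes both signals are already time-aligned and resampled to the same rate; the class name is hypothetical.

```java
import java.util.Arrays;

/** Illustrative Median Absolute Error between two equally sampled signals. */
final class MedianAbsoluteError {

    static double compute(double[] estimate, double[] reference) {
        if (estimate.length != reference.length || estimate.length == 0) {
            throw new IllegalArgumentException("signals must be non-empty and of equal length");
        }
        double[] errors = new double[estimate.length];
        for (int i = 0; i < errors.length; i++) {
            errors[i] = Math.abs(estimate[i] - reference[i]);
        }
        Arrays.sort(errors);
        int mid = errors.length / 2;
        // Odd length: the middle element. Even length: the mean of the two middle elements.
        return errors.length % 2 == 1
                ? errors[mid]
                : (errors[mid - 1] + errors[mid]) / 2.0;
    }
}
```

Because the median ignores the tails of the error distribution, a handful of large outliers (for example, a brief moment of phone handling) barely affects the reported value, unlike a mean-based error.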

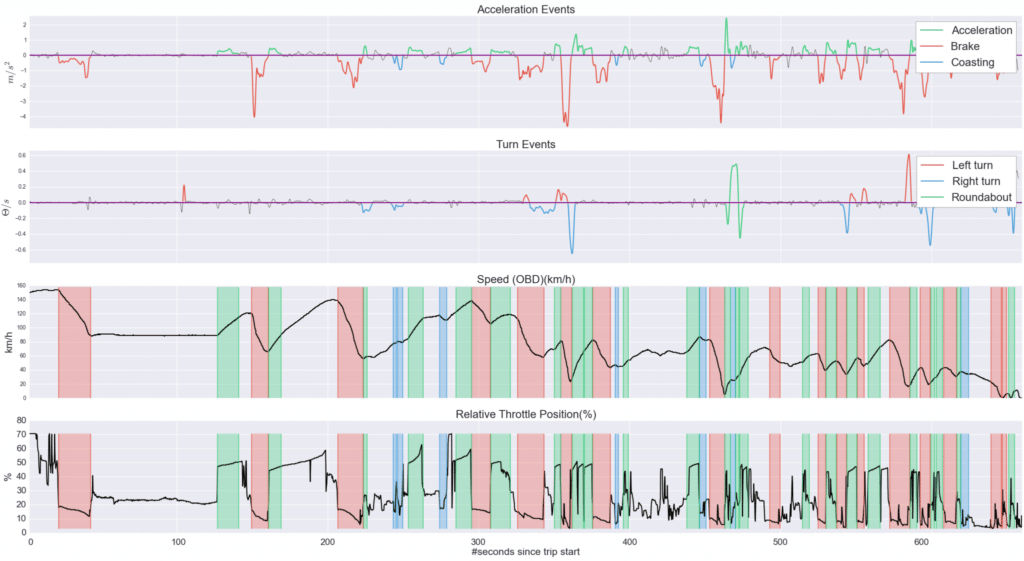

To further qualify the accuracy of our algorithms, we compared a large set of test trips with data captured through the car's On Board Diagnostics (OBD) bus. By comparing our acceleration measures directly to the car's OBD velocity and throttle position reports, we show that our event detection, based on the normalized sensor data, accurately reflects the user's driving style:

By comparing OBD data to our detected events, we further qualify the accuracy of our algorithms. For example, note how the acceleration around second 80 consists of three parts where the driver probably switched gears. This is reflected directly in the OBD throttle position ground truth, and is measured correctly by our algorithm.
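To illustrate what an event looks like once the sensor data has been normalized, the sketch below extracts acceleration and braking events from a longitudinal acceleration signal with a plain magnitude threshold. This is a deliberately naive stand-in for our machine learning pipeline; the class names and the threshold value are assumptions. Each event carries a start time, a duration and a signed peak value.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative threshold-based event extraction on longitudinal acceleration. */
final class EventDetector {

    /** One acceleration (peak > 0) or braking (peak < 0) event. */
    static final class Event {
        final double startSeconds;
        final double durationSeconds;
        final double peak; // signed extreme value, m/s^2

        Event(double startSeconds, double durationSeconds, double peak) {
            this.startSeconds = startSeconds;
            this.durationSeconds = durationSeconds;
            this.peak = peak;
        }
    }

    /**
     * @param accel        gravity-free longitudinal acceleration, m/s^2
     * @param sampleRateHz sampling rate of the signal
     * @param threshold    hypothetical positive magnitude threshold, m/s^2
     */
    static List<Event> detect(double[] accel, double sampleRateHz, double threshold) {
        List<Event> events = new ArrayList<>();
        int start = -1;
        int currentSign = 0;
        double peak = 0.0;
        // Iterate one step past the end so an event that is still open gets closed.
        for (int i = 0; i <= accel.length; i++) {
            int sign = 0;
            if (i < accel.length && Math.abs(accel[i]) >= threshold) {
                sign = accel[i] > 0 ? 1 : -1;
            }
            // Close the running event when the signal drops below the threshold or flips sign.
            if (start >= 0 && sign != currentSign) {
                events.add(new Event(start / sampleRateHz, (i - start) / sampleRateHz, peak));
                start = -1;
            }
            if (sign != 0) {
                if (start < 0) {
                    start = i;
                    currentSign = sign;
                    peak = accel[i];
                } else if (Math.abs(accel[i]) > Math.abs(peak)) {
                    peak = accel[i];
                }
            }
        }
        return events;
    }

    /** Time in seconds between the end of each event and the start of the next one. */
    static double[] gapsBetween(List<Event> events) {
        double[] gaps = new double[Math.max(0, events.size() - 1)];
        for (int i = 1; i < events.size(); i++) {
            Event previous = events.get(i - 1);
            gaps[i - 1] = events.get(i).startSeconds
                    - (previous.startSeconds + previous.durationSeconds);
        }
        return gaps;
    }
}
```

The magnitude and duration of such events, and the gaps between them, are the kind of quantities on which event-based driving scores can be built.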

Driving behavior scores

To be able to compare, cluster and analyse a user's driving behavior, we defined four types of driving behavior scores:

- Efficiency: based on the magnitude and duration of the events, i.e. the G-force encountered by the driver and passengers while accelerating or braking, and the centripetal force encountered during turns.

- Anticipation: based on the time between subsequent events. It represents how smoothly a user drives, and models the ability to anticipate traffic conditions. The anticipation score captures sequences of events, such as braking before versus during a turn, coasting versus braking in front of a traffic light, etc.

- Phone usage: related to the number of times a driver uses the phone while driving.

- Legalness: represents the number of speed limit violations. We developed an accurate, in-house map matching solution, specifically designed to handle low frequency GPS fixes.

These scores are normalized using robust statistics, based on a large population of drivers. As a result, a score of 50% on any of these measures indicates that a driver behaves like the average driver. A higher score is achieved by users who drive more carefully than the average driver.

Each of these scores is reported for different types of roads, which we detect automatically as well:

- Highway

- Non-highway - within the city

- Non-highway - outside the city

Normalization relative to the population of all drivers is done per road type, as driving behavior within a city usually differs quite a lot from driving behavior on the highway. Moreover, normalization is geographically constrained, such that different driving styles are taken into account, depending on the country of origin.
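One robust way to obtain such a population-relative score is a percentile rank within the reference population for the same road type, sketched below. The choice of percentile ranks, the class name, the map keys and the assumption that a lower raw metric means safer driving are all illustrative, not a description of our actual statistics.

```java
import java.util.Map;

/** Illustrative percentile-rank normalization per road type. */
final class ScoreNormalizer {

    /**
     * @param populationByRoadType raw metric values of reference drivers, keyed by road type
     *                             (lower raw values are assumed to mean safer driving)
     * @param roadType             hypothetical key, e.g. "highway"
     * @param raw                  raw metric of the driver being scored
     * @return percentage of the reference population that drives worse, ties counted as half
     */
    static double score(Map<String, double[]> populationByRoadType, String roadType, double raw) {
        double[] population = populationByRoadType.get(roadType);
        if (population == null || population.length == 0) {
            throw new IllegalArgumentException("no reference population for " + roadType);
        }
        double worse = 0.0;
        for (double other : population) {
            if (other > raw) {
                worse += 1.0;
            } else if (other == raw) {
                worse += 0.5;
            }
        }
        return 100.0 * worse / population.length;
    }
}
```

With this definition the median driver of each road type scores 50%, and a geographic constraint could be added by keying the reference populations by country as well as by road type.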

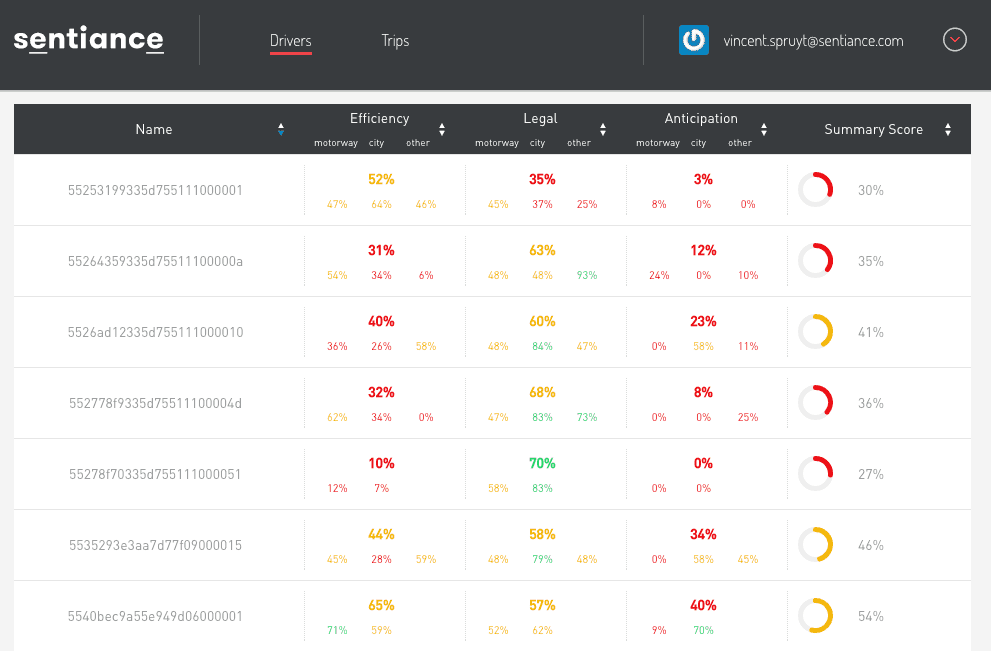

These scores and driving profiles are summarized in our fleet dashboard, made available to our commercial partners:

A screenshot from our fleet dashboard. This dashboard is made available to our commercial partners, together with a mobile SDK and an API that allows you to query the driving behavior details programmatically.

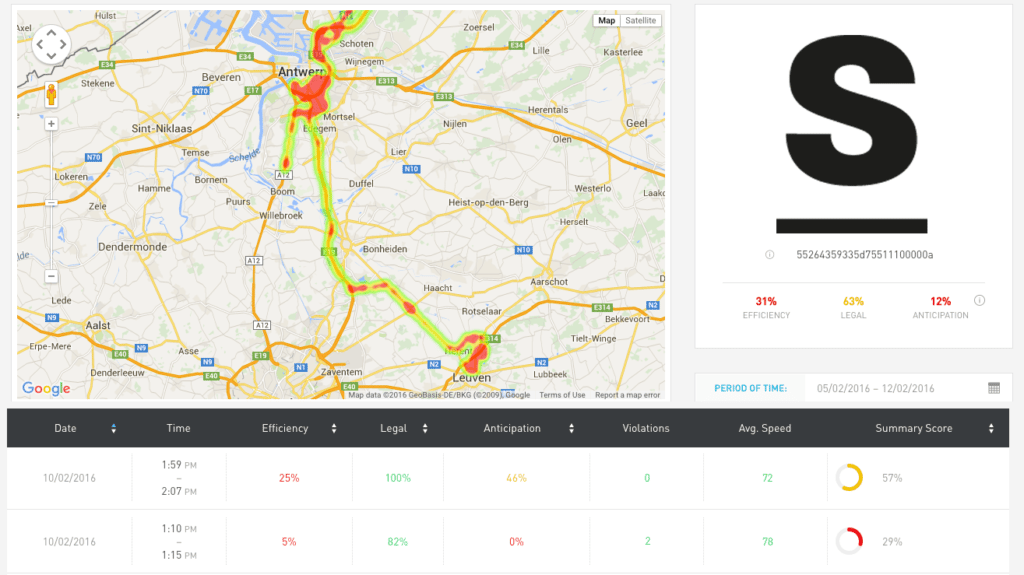

The dashboard also allows our customers to dig deeper into the driving behavior of specific users, which is often useful for fleet management and driver coaching:

Apart from general segmentation, driving behavior for specific users can be queried too. This is often useful for fleet management and driver coaching.

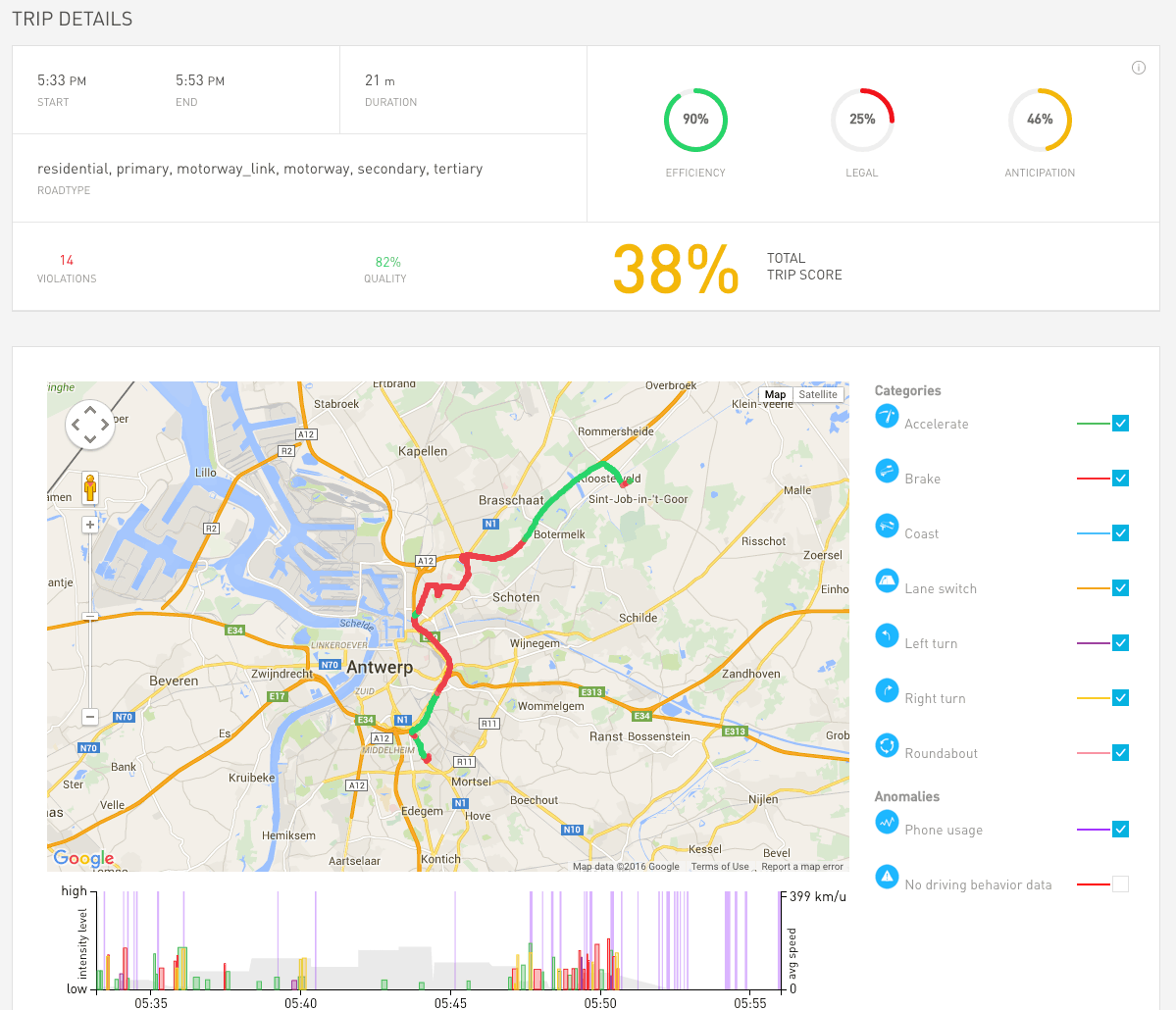

Finally, specific trips and their detailed events can be visualized:

Specific trips can be analyzed in detail to discover why a certain trip score was obtained. Events are mapped to the trajectory and can be enabled or disabled interactively.

We developed a lightweight mobile Software Development Kit (SDK) that can be embedded into any Android or iOS app with only a few lines of code:

    @Override
    public void onCreate() {
        super.onCreate();
        // Initialize the SDK with the app's configuration object.
        Sdk.getInstance(this).init(config);
    }

Context detection and behavioral segmentation

At Sentiance, we consider driving behavior to be a specific aspect of user behavior in general. Our technology is able to dynamically discover patterns in a user's day-to-day behavior. Based on these patterns, we automatically detect the user's home and work locations, points of interest (i.e. locations that are often visited and where a lot of time is spent), mobility habits, and semantic time.

Semantic time differs from calendar time in that it is specific to each user. One user's semantic morning might start at 8:00 AM, whereas another user's semantic morning might start at 7:30 AM. In a more extreme case, a person who works at night and sleeps during the day could have a semantic morning that starts at 11:00 PM. Semantic time thus corresponds to a user's biological clock, and depends on the user's lifestyle.

The concept of semantic time helps us to cope with various behavioral patterns (e.g. night workers versus day workers), and allows our customers to target their user segments at the right time, in the right place and context. Combining behavioral segments with real-time context detection and semantic time yields an extremely powerful platform that is currently being used by customers in different sectors, ranging from fleet management to ride sharing, UBI, smart cities, healthcare, IoT and marketing.

If you want to know more about our general context detection and behavioral profiling capabilities, stay tuned, as we will soon devote a complete article to this exciting part of our technology!

Conclusion

In this article we described some of the inner workings of our driving behavior modelling algorithms, and showed several real-life examples and results. We evaluated the accuracy of our methods, and showed that they perform at least as accurately as black box IMU approaches.

Furthermore, we discussed some of the advantages of a smartphone based UBI solution compared to black box alternatives, and briefly indicated how our methods are used today by our customers in the fleet and mobility sector.

Finally, we presented our commercial solution, containing an easy to integrate mobile SDK, a REST API allowing you to dynamically query our intelligence layer, and a set of dashboards for analytics purposes.

For more information, you can download our demo app, Journeys, or connect with one of our sales representatives!