In this blog post, we show how we put basic privacy principles into practice as a scale-up that processes vast amounts of personal data in the form of location data coming from mobile phones. It is our vision that, in the future, motion insights will contribute to solving some of today's global problems related to mobility, sustainability, and healthier lifestyles. Our ambition is to be the most complete provider of motion insights that are processed and used in a privacy-friendly way.

Data privacy today

Today, many organizations don't succeed in understanding their customers well. They struggle to capture data from their customers in a way that doesn't undermine trust. Yet they will have to solve that problem if they want to stay relevant, innovate, and meet their customers' expectations. Our answer is a platform that turns sensor data from mobile phones into actionable motion insights, which are then used by our partners, i.e., organizations and companies that want to create an impact with their products and services. We strongly believe that personal data and AI should be used for good.

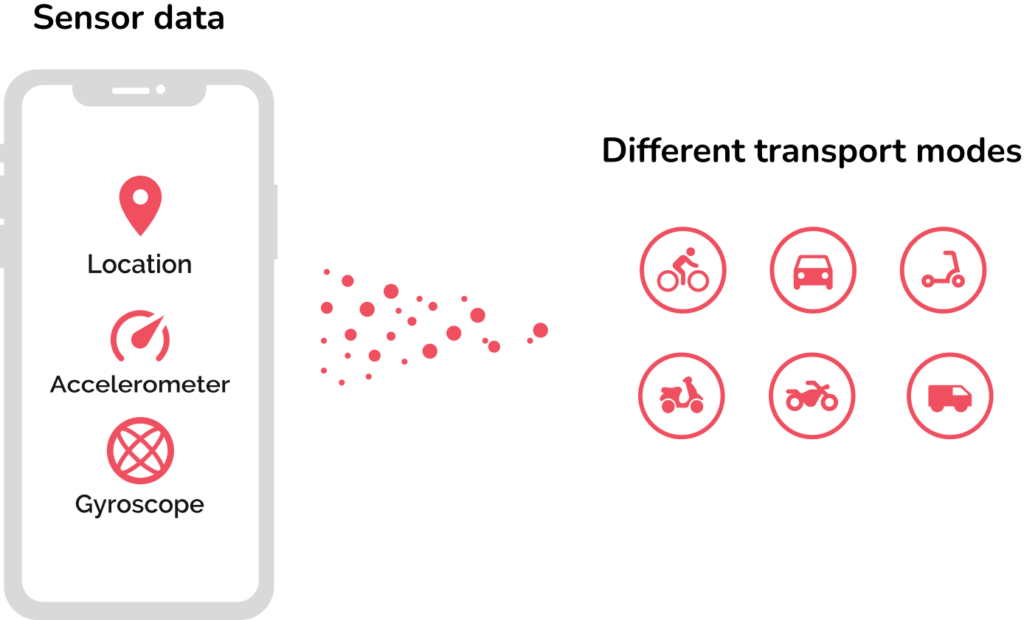

The way we work is simple. We provide a piece of software, our mobile SDK, that goes into the app of our partners, who deploy the app to their customers. The SDK is responsible for registering movement. When a person moves more than a certain distance, the SDK wakes up and starts collecting sensor data. This continues until the person arrives at their destination. When their location no longer changes significantly, the SDK concludes that the user has become stationary and stops collecting sensor data.
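The start/stop behavior described above is essentially a small state machine. The following is a minimal sketch of that idea, not the Sentiance SDK's actual logic: the class and function names and all three thresholds (`START_DISTANCE_M`, `STATIONARY_RADIUS_M`, `STATIONARY_DURATION_S`) are hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical thresholds, for illustration only.
START_DISTANCE_M = 100.0       # movement needed before collection starts
STATIONARY_RADIUS_M = 50.0     # drift that still counts as "not moving"
STATIONARY_DURATION_S = 300.0  # time within that radius before the trip ends


@dataclass
class Fix:
    t: float    # seconds since an arbitrary epoch
    lat: float
    lon: float


def haversine_m(a: Fix, b: Fix) -> float:
    """Great-circle distance between two fixes, in metres."""
    r = 6_371_000.0
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(h))


@dataclass
class TripDetector:
    collecting: bool = False
    anchor: Optional[Fix] = None  # reference point for the current state
    trip: List[Fix] = field(default_factory=list)
    trips: List[List[Fix]] = field(default_factory=list)

    def on_fix(self, fix: Fix) -> None:
        if self.anchor is None:
            self.anchor = fix
            return
        moved = haversine_m(self.anchor, fix)
        if not self.collecting:
            # Idle: wake up only once the user has moved far enough.
            if moved >= START_DISTANCE_M:
                self.collecting = True
                self.trip = [self.anchor, fix]
                self.anchor = fix
            return
        self.trip.append(fix)
        if moved > STATIONARY_RADIUS_M:
            # Still travelling: re-anchor on the latest position.
            self.anchor = fix
        elif fix.t - self.anchor.t >= STATIONARY_DURATION_S:
            # Stayed within a small radius long enough: the trip has ended.
            self.trips.append(self.trip)
            self.collecting = False
            self.trip = []
            self.anchor = fix
```

Re-anchoring on every significant move means "stationary" is measured from the last real displacement, so a slow stop at a traffic light does not end the trip unless it lasts longer than the stationary window.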

The sensor data is constantly evaluated and enriched, which leads to the motion insights mentioned earlier. From undeclared sensor data, i.e., location fixes and measurements from a phone's accelerometer and gyroscope, we can produce insights about a person's mobility profile and lifestyle routines, and use them to optimize user engagement.

Why a mobile phone? It’s a good proxy for human behavior, and it has become the trusted hub for all consumer transactions. It’s clear we need to take strong measures to protect consumers’ privacy and maintain that trust.

The need for a Data Protection Officer (DPO)

Having the means to learn about a person's day-to-day behavior requires Sentiance to have a dedicated DPO. As a scale-up with a limited budget, we looked within our own team for someone who could be trained to take on that role. We sent our most suitable candidate, our VP of Finance, to DPO training, from which he returned with a clear message: the DPO is an independent role within the organization and cannot be combined with a finance role because of a potential conflict of interest.

As a result, our current DPO is external, yet very involved in our activities. However, having an internal resource (our VP of Finance) take formal DPO training allowed us to internalize knowledge and understanding of data protection legislation and privacy principles, a benefit we still enjoy today.

Our role as a processor

Internalizing knowledge means it needs to be distributed to the teams. That’s why in our annual training we focus on clearly explaining our role versus the role of our partners. We process personal data on behalf of our partners in the legal capacity of a Data Processor. This role is anchored in our contracts, which always include a data processing agreement that clarifies our roles and mutual obligations. We explain our role and the importance of personal data to the team using simple terms that everyone understands. For instance, “If Sentiance were a bank, personal data would be the money customers deposit with us”.

Location data and anonymity

Metaphors help explain the basics, and when you sign your first big partner, you need to put them to work. Clarifying our role towards our partners is one thing; understanding the sensitivity of the data being processed is another. Despite many awareness and training sessions for the team, it isn't always clear what constitutes personal data. A typical, and unfortunately all too frequent, discussion goes as follows:

“We don’t collect personal data. It’s anonymous sensor data.”

“What can you do with that location data alone? It’s not PII, right?”

The location data collected and processed by the Sentiance SDK can be indirectly linked to an individual by combining it with external data sources. We learn about the home and work locations of individuals and the places they visit, and social media provides enough external information to link those data points back to an individual. We don't collect traditional PII like name or date of birth, and we use pseudonymous identifiers to refer to individuals. Our data is pseudonymized, and we take a clear stance that location data, at least in the form we collect and process it, cannot be anonymized.
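To make this concrete, here is a minimal sketch of how little it takes to recover a home and a work location from pseudonymous fixes. It is a hypothetical heuristic, not how Sentiance derives places: the function names, the roughly 100 m grid, and the night and office-hour windows are all assumptions. Once a (home, work) pair is known, matching it against public profiles is often enough to single out one person.

```python
from collections import Counter
from datetime import datetime


def cell(lat: float, lon: float, decimals: int = 3) -> tuple:
    """Snap a coordinate to a grid cell (3 decimals is roughly 100 m of latitude)."""
    return (round(lat, decimals), round(lon, decimals))


def infer_home_work(fixes):
    """Guess home and work cells for ONE pseudonymous id.

    fixes: iterable of (datetime, lat, lon). Hypothetical rules:
    home = most frequent cell between 22:00 and 06:00,
    work = most frequent cell on weekdays between 09:00 and 17:00.
    """
    night, office = Counter(), Counter()
    for ts, lat, lon in fixes:
        c = cell(lat, lon)
        if ts.hour >= 22 or ts.hour < 6:
            night[c] += 1
        elif ts.weekday() < 5 and 9 <= ts.hour < 17:
            office[c] += 1
    home = night.most_common(1)[0][0] if night else None
    work = office.most_common(1)[0][0] if office else None
    return home, work
```

No name or date of birth is involved, yet the output already narrows the pseudonymous id down to a household and an employer.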

What do we consider anonymous data?

- Aggregated statistics to improve our models are one example. For instance, the average speed of a group of users, derived from their individual location fixes, on a specific road is anonymous data. It does not link back to an individual but can be used to improve our speeding score or map-matching algorithms.

- Another example is short segments of sensor data from a mobile phone's accelerometer. With those segments, we can improve our transportation classification functionality and train a model that, again, no longer contains individual data.
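The road-speed example can be sketched as follows. This assumes one common safeguard, releasing a statistic only when enough distinct users contributed to it; the `MIN_USERS` value and the function name are hypothetical and not necessarily what Sentiance uses.

```python
from collections import defaultdict
from statistics import mean

MIN_USERS = 10  # hypothetical minimum group size before a statistic is released


def average_speed_per_road(samples, min_users=MIN_USERS):
    """samples: iterable of (pseudonymous_user_id, road_id, speed_kmh).

    Returns {road_id: mean speed} only for roads where at least `min_users`
    distinct users contributed. User ids never appear in the output.
    """
    speeds = defaultdict(list)
    users = defaultdict(set)
    for user_id, road_id, speed in samples:
        speeds[road_id].append(speed)
        users[road_id].add(user_id)
    return {
        road: round(mean(values), 1)
        for road, values in speeds.items()
        if len(users[road]) >= min_users
    }
```

Roads with too few contributors are dropped entirely rather than reported, because an average over one or two users would still describe those individuals.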

The discussion about the anonymity of the data we process resurfaces now and then. The only way to deal with it is to repeat the message that the data we process cannot be anonymized. Every year, our training focuses on the definition of personal data and the potential impact of exposing location data. Recent cases of location data misuse reported in the press are a great help.

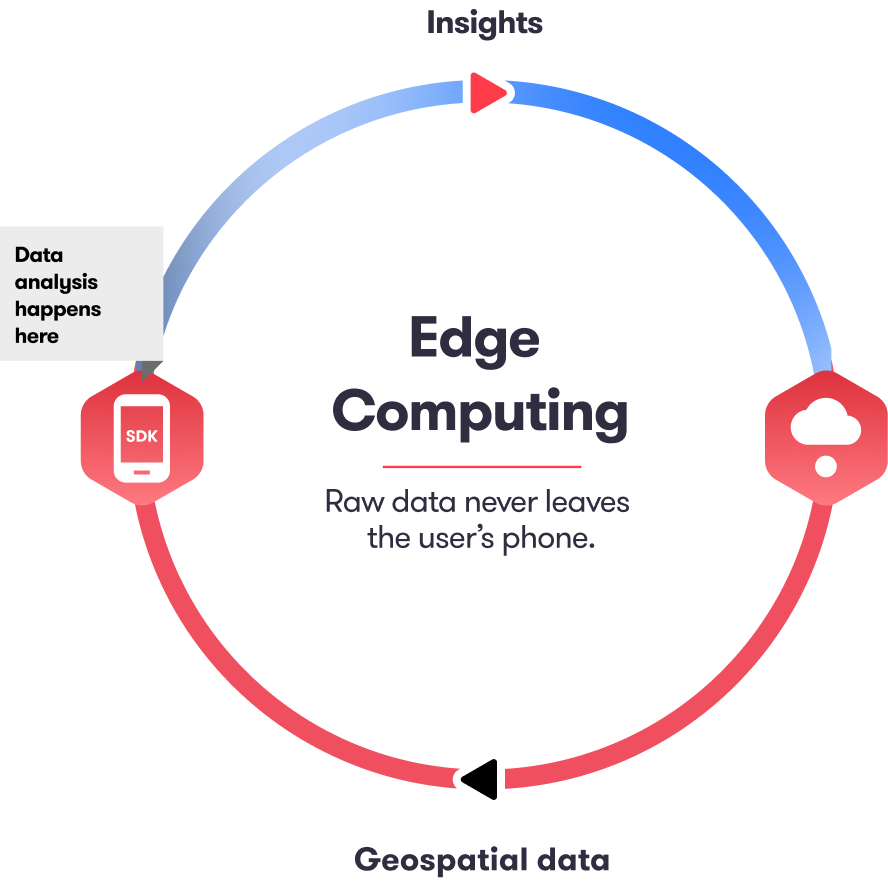

The road to on-device

On-device data processing is the next level for data privacy. Instead of sending the data gathered by our SDK to the cloud, we are working on moving all components to the edge, so that personal data stays on the device and only aggregated insights leave the device, for those use cases that require it.

The Sentiance SDK stays secure in your app. With edge computing, the raw data is collected and processed directly in the app and never leaves the user's phone.
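The on-device idea boils down to a boundary: raw data is stored and processed locally, and only an aggregate crosses that boundary. The sketch below illustrates this in Python for readability; a real SDK would run natively on the phone. `Segment`, `OnDeviceStore`, and `export_insight` are hypothetical names, and "minutes per transport mode" is just an example of an aggregated insight.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """A classified stretch of movement; kept only in on-device storage."""
    start_s: int
    end_s: int
    mode: str     # e.g. "walking", "car", "bicycle"
    path: tuple   # raw (lat, lon) fixes, never exported


class OnDeviceStore:
    def __init__(self):
        self._segments = []  # raw data stays inside this object

    def add(self, seg: Segment) -> None:
        self._segments.append(seg)

    def export_insight(self) -> dict:
        """The only data meant to leave the device: minutes per transport mode."""
        minutes = Counter()
        for s in self._segments:
            minutes[s.mode] += (s.end_s - s.start_s) / 60
        return {"minutes_per_mode": dict(minutes)}
```

Keeping the export path to a single method makes it easy to review exactly what leaves the phone.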

Edge computing also significantly reduces the overall cloud server footprint and avoids high hosting and storage costs, which makes it a cost-efficient solution today. This way, we can ensure privacy, help you build better products, and increase ROI.

When things don't go as planned

Despite all precautions and measures taken, sometimes things go wrong. Once, a customer's personal data ended up in the wrong location! What was the cause? Human error. How did we find out about the incident? The person who made the mistake reported it immediately. In a small organization, you depend on the people in your team to raise such issues. Through awareness training, you try to create a reflex within the team to report incidents as soon as they notice them, even if they were involved themselves. When an incident occurs, it's all hands on deck. This is where an organization is put to the test, and the importance of proper incident management cannot be overstated.

Of course, some incidents are not immediately seen or reported. That's where vulnerability scanning tools and activities such as penetration tests or bug bounty programs help. When an incident is discovered with some delay, it's a matter of reconstructing what happened, which requires you to be able to (virtually) go back in time. Therefore, we have an extensive and effective logging policy: all access to systems, and especially to APIs, must be logged, and those logs must be kept long enough to support an investigation.
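A logging policy like this comes down to two things: every access produces a structured record, and records are only purged once they are older than the retention period. Here is a minimal sketch under stated assumptions: the field names, the `AccessLog` class, and the one-year `RETENTION` are hypothetical, and a real setup would write to durable, tamper-resistant storage rather than a Python list.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=365)  # hypothetical retention period


@dataclass(frozen=True)
class AccessEvent:
    timestamp: str  # ISO 8601, UTC
    actor: str      # service account or employee id
    api: str        # endpoint or system that was accessed
    resource: str   # pseudonymous id of the records touched
    action: str     # "read", "export", "delete", ...


class AccessLog:
    def __init__(self):
        self.events = []

    def record(self, actor, api, resource, action, now=None) -> str:
        if now is None:
            now = datetime.now(timezone.utc)
        ev = AccessEvent(now.isoformat(), actor, api, resource, action)
        self.events.append(ev)
        return json.dumps(asdict(ev))  # one JSON line, e.g. for shipping to log storage

    def purge_expired(self, now=None) -> None:
        """Drop only events older than the retention period."""
        if now is None:
            now = datetime.now(timezone.utc)
        cutoff = now - RETENTION
        self.events = [e for e in self.events
                       if datetime.fromisoformat(e.timestamp) >= cutoff]
```

The retention period is the design choice that matters here: it has to be longer than the time it typically takes to discover an incident, or the logs will be gone exactly when they are needed.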

GDPR articles 33 and 34

The importance of logging capabilities becomes even clearer when you need to start communicating about an incident. At Sentiance, we have a very open communication policy toward our partners. Not only is it a contractual requirement, but it’s also who we want to be: we do not want to hide when things go wrong. Instead, we show that we can handle such incidents.

Communicating about a breach with customers is not easy. Deciding to inform affected individuals is even harder. Reporting to the authorities is yet another decision that requires careful consideration and consultation with the client. Ultimately, notifying the authorities of an incident that involves customer data is an obligation that lies with our partners in their capacity as Data Controllers. Hence the need for a clear alignment of roles and responsibilities.

There are subtle differences between GDPR Articles 33 and 34, which describe the decision criteria for notifying the supervisory authority of a breach and for communicating a breach to data subjects, respectively.

According to Article 33, a controller shall notify a personal data breach to the supervisory authority, "unless the personal data breach is unlikely to result in a risk to the rights and freedoms of natural persons". Article 34, however, requires communication to data subjects "when the personal data breach is likely to result in a high risk to the rights and freedoms of natural persons".
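The difference between the two thresholds can be written down as a small decision function. This is a deliberately simplified sketch: it assumes the hard part, assessing the risk level, has already been done, and it ignores the Article 34(3) exceptions (for example, data rendered unintelligible by encryption) as well as the Article 33(5) duty to document every breach. The 72-hour figure comes from Article 33(1), which asks for notification without undue delay and, where feasible, within 72 hours of becoming aware of the breach. As a processor, Sentiance's own obligation under Article 33(2) is to notify the controller without undue delay; the outcome computed here is the controller's decision. `Risk` and `breach_obligations` are hypothetical names.

```python
from datetime import datetime, timedelta
from enum import Enum


class Risk(Enum):
    UNLIKELY = 0  # breach unlikely to result in a risk to individuals
    RISK = 1      # likely to result in a risk
    HIGH = 2      # likely to result in a high risk


def breach_obligations(risk: Risk, controller_aware_at: datetime) -> dict:
    """Simplified reading of GDPR Art. 33(1) and 34(1) for the controller."""
    notify_authority = risk is not Risk.UNLIKELY  # Art. 33(1)
    inform_subjects = risk is Risk.HIGH           # Art. 34(1)
    deadline = controller_aware_at + timedelta(hours=72) if notify_authority else None
    return {
        "notify_authority": notify_authority,
        "authority_deadline": deadline,  # "where feasible" within 72 hours
        "inform_data_subjects": inform_subjects,
    }
```

The asymmetry is visible in the two boolean lines: any likely risk triggers the authority notification, while only a likely high risk triggers communication to the individuals.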

These articles raise many questions. What is high risk? Does a single exposed email address imply high risk? And how likely is "likely"? These are difficult questions to answer, and small organizations should not hesitate to seek external advice from a third party. On the question of likelihood, an effective logging policy can help by delivering conclusive proof that no one accessed the data.
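In practice, "proof that no one accessed the data" is a query over the access logs: which events touched the exposed records while they were exposed? A minimal sketch, assuming log events are available as `(timestamp, actor, resource)` tuples; the function name and tuple layout are hypothetical. An empty result is only conclusive if every access path to the exposed location was logged for the entire window.

```python
from datetime import datetime


def accesses_during_exposure(events, exposed_resources, start: datetime, end: datetime):
    """Return log events that touched exposed records during the exposure window.

    events: iterable of (timestamp: datetime, actor: str, resource: str).
    """
    exposed = set(exposed_resources)
    return [
        (ts, actor, res)
        for ts, actor, res in events
        if res in exposed and start <= ts <= end
    ]
```

If the result is empty, the breach assessment can rest on evidence rather than assumption; if it is not, the returned events show exactly whose data was accessed and by whom.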

Taking preventative measures

Taking measures to prevent incidents is important, but one should not expect to avoid all of them. Security incidents happen; they are a normal part of operating. What matters is how you deal with them and how you reduce their impact.

At Sentiance, we focus our information security and privacy management on 3 priorities:

The Sentiance data charter

Sometimes you make mistakes; other times you must make choices. We are not in the business of selling data, and when we receive a request to buy the personal data we process (even if it's from our demo applications), we firmly decline. We have also declined certain customer use cases in the past. If personal data and AI are not used for good, we don't want to proceed. Such a decision is not always straightforward. To guide the team, we wrote our data charter, which we use as a moral compass in our day-to-day decision-making. In the data charter, we state that we work on the legal basis of consent, and we stress all the principles around fairness and transparency. There must also be a clear benefit for the end user. Our goal is to lead by example and make this clear to our customers.

Changing mobile ecosystems

Fortunately, we do not have to rely solely on our data charter and our conscience. The mobile ecosystem, i.e., mobile operating systems and app stores, pushes app developers toward more transparency. Commercially, this may initially create friction for companies trying to use personal data to offer a better experience to their users. In the long term, however, privacy becomes a clear value proposition. At Sentiance, we help our partners obtain valid consent from their users through our location permission playbook, which focuses on value exchange and transparency.

Global ambitions and implications

Today, Sentiance operates in over 30 countries. Being a European company has advantages when you have international ambitions, but it sometimes also gets in the way. In our role as a processor, we have a data processing agreement (DPA) in place with all partners. Such an agreement governs the processing of personal data between the data controller (our partner) and the data processor (Sentiance). The DPA makes extensive reference to the GDPR, which does not directly apply to non-European customers processing the data of non-European residents. Hence, those customers sometimes ask the (valid) question of why they have to comply with the GDPR. They don't, but we do.

Internationally, we are also dealing with a rapidly changing regulatory landscape. Some jurisdictions have their own data protection laws, like the CCPA in California, while others have adopted laws modeled on the GDPR, like the recently approved law in Indonesia. Some customers also come with sector-specific rules. Following these developments is an ongoing activity within Sentiance.

Data subject rights requests - annual test

Under GDPR and other data protection laws, data subjects have legal rights. As a data controller, you have an obligation to honor those rights. As a processor, you have an obligation to support the controller in meeting its obligations to respond to those requests. Sentiance partners can handle most requests autonomously through our API. They can make a request to retrieve all available data, but also to delete all data and stop the collection and processing of personal data.
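The two request types mentioned here, retrieving all data and deleting it while stopping further collection, can be modeled with a small dispatcher. This sketch does not reflect the Sentiance API: `RequestType`, `SubjectDataStore`, and the in-memory storage are hypothetical stand-ins, and treating erasure as also blocking future collection is an assumption based on the description above.

```python
from enum import Enum


class RequestType(Enum):
    ACCESS = "access"    # retrieve all available data
    ERASURE = "erasure"  # delete all data and stop collection and processing


class SubjectDataStore:
    """Hypothetical in-memory stand-in for the processor's storage."""

    def __init__(self):
        self.records = {}          # pseudonymous id -> list of records
        self.stopped = set()       # ids for which collection has been stopped

    def ingest(self, subject_id: str, record: dict) -> bool:
        if subject_id in self.stopped:
            return False  # honour an earlier erasure request
        self.records.setdefault(subject_id, []).append(record)
        return True

    def handle(self, subject_id: str, req: RequestType) -> list:
        if req is RequestType.ACCESS:
            return list(self.records.get(subject_id, []))
        if req is RequestType.ERASURE:
            self.records.pop(subject_id, None)
            self.stopped.add(subject_id)
            return []
        raise ValueError(f"unsupported request type: {req}")
```

Recording the stop in `stopped`, rather than only deleting records, is what prevents data from quietly reappearing the next time the app uploads.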

In addition, our support team handles offline requests received via email. For these, the support team follows the GDPR playbook, which defines the different types of data subject rights requests and the steps to follow for each of them. To ensure the playbook is known and executed correctly, we organize annual data subject rights tests. After several iterations, the team has become so efficient at handling these requests that they are typically resolved within 1-2 business days. We also measure the response and handling times of these requests to keep ourselves honest.
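Measuring "resolved within 1-2 business days" requires counting business days rather than calendar days. A minimal sketch, assuming each request is recorded as a pair of received and resolved dates; the function names and the two-day target are illustrative, and public holidays are not taken into account.

```python
from datetime import date, timedelta


def business_days_between(received: date, resolved: date) -> int:
    """Weekdays elapsed after `received` up to and including `resolved`.

    Weekends are skipped; public holidays are ignored in this sketch.
    """
    days, d = 0, received
    while d < resolved:
        d += timedelta(days=1)
        if d.weekday() < 5:
            days += 1
    return days


def share_within_target(requests, target_days: int = 2) -> float:
    """requests: list of (received, resolved) dates. Fraction handled within target."""
    if not requests:
        return 0.0
    ok = sum(business_days_between(r, s) <= target_days for r, s in requests)
    return ok / len(requests)
```

A request received on a Friday and resolved on the following Monday counts as one business day, which matches how a support team experiences the workload.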

So there you have it. Data privacy is no laughing matter. We're here to address today's needs and anticipate tomorrow's. Check out our Privacy page to learn more.